Decision Support for Sharing Data Using Differential Privacy

Mark F. St. John, Grit Denker, Peeter Laud, Karsten Martiny, Alisa Pankova, Dusko Pavlovic


Presentation time: 2021-10-27T15:40:00Z

Fast forward

Direct link to video on YouTube: https://youtu.be/1IBJU1ZK3s8

Abstract

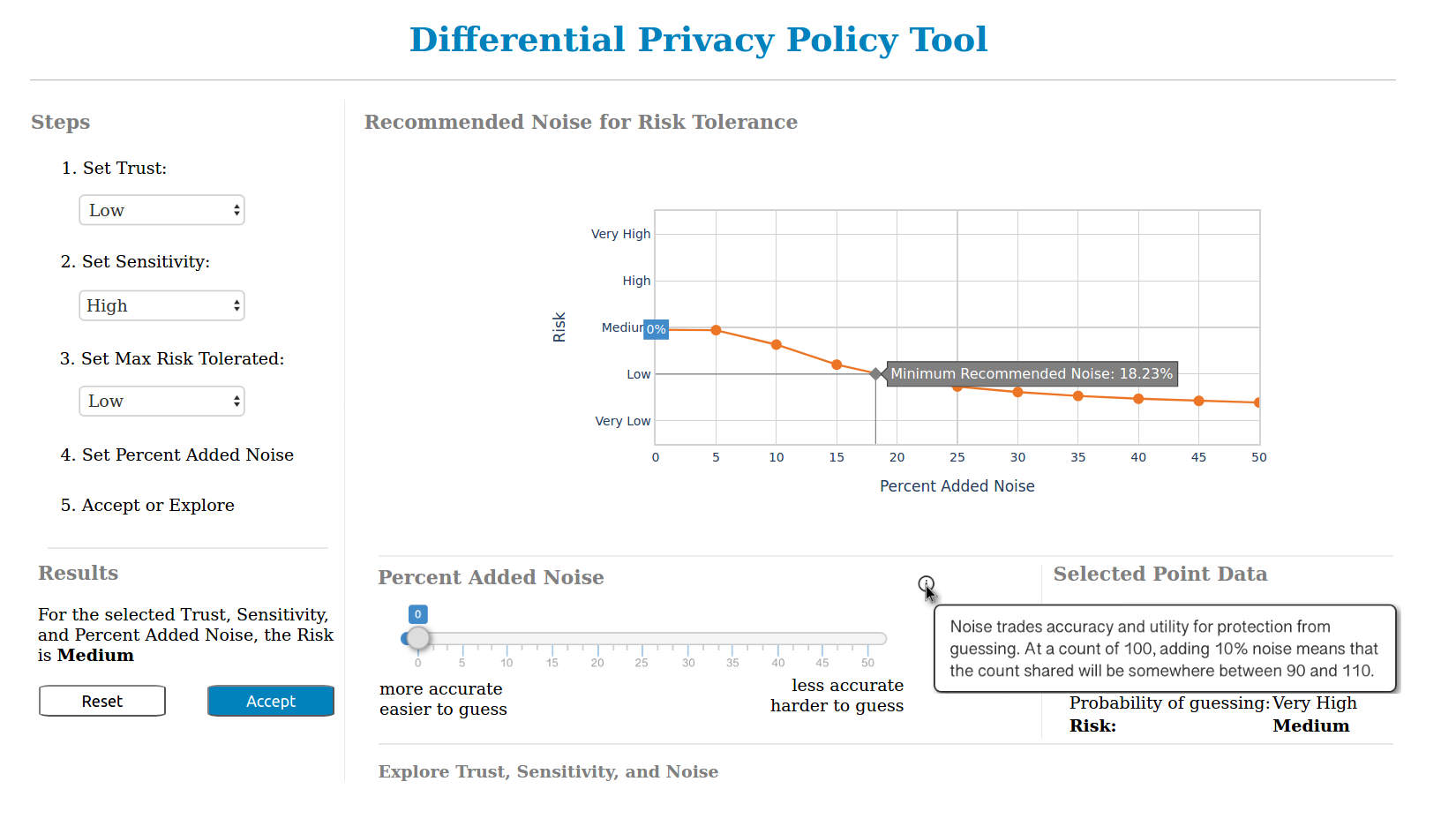

Owners of data may wish to share some statistics with others, but they may be worried about the privacy of the underlying data. An effective solution to this problem is to employ provable privacy techniques, such as differential privacy, to add noise to the statistics before releasing them. This protection lowers the risk of sharing sensitive data with data-sharing partners who are trusted to varying degrees. Unfortunately, applying differential privacy in its mathematical form requires one to fix certain numeric parameters, such as the privacy budget ε, which involves subtle computations and expert knowledge that the data owners may lack.
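To illustrate what adding noise to a statistic and fixing a numeric parameter involve, here is a minimal Python sketch of the standard Laplace mechanism. It is not the authors' decision-support tool; the function names `laplace_noise` and `laplace_mechanism` and the example count are hypothetical. The sketch assumes a single numeric statistic whose sensitivity (the largest change one individual's record can cause) is known, and it sets the noise scale to sensitivity / ε.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables, each with
    mean `scale`, is Laplace-distributed with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def laplace_mechanism(true_value: float, sensitivity: float, epsilon: float,
                      rng: random.Random) -> float:
    """Release `true_value` under epsilon-differential privacy.

    sensitivity: the most the statistic can change when one individual's
        record is added or removed (1 for a simple count).
    epsilon: the privacy budget; smaller values add more noise.
    """
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    scale = sensitivity / epsilon  # noise scale b = sensitivity / epsilon
    return true_value + laplace_noise(scale, rng)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed only to make this demo repeatable
    true_count = 130  # hypothetical number of records matching a query
    for eps in (0.1, 1.0, 10.0):
        noisy = laplace_mechanism(true_count, sensitivity=1.0, epsilon=eps, rng=rng)
        print(f"epsilon={eps:>4}: released count = {noisy:.1f}")
```

Because the mean absolute value of Laplace noise equals its scale, a count (sensitivity 1) released with ε = 0.1 is typically off by about 10, while ε = 10 gives a typical error of about 0.1. Choosing ε is therefore a trade-off between accuracy and privacy. The sketch uses Python's non-cryptographic `random` module for brevity; a real deployment would need a secure randomness source and care with floating-point effects.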