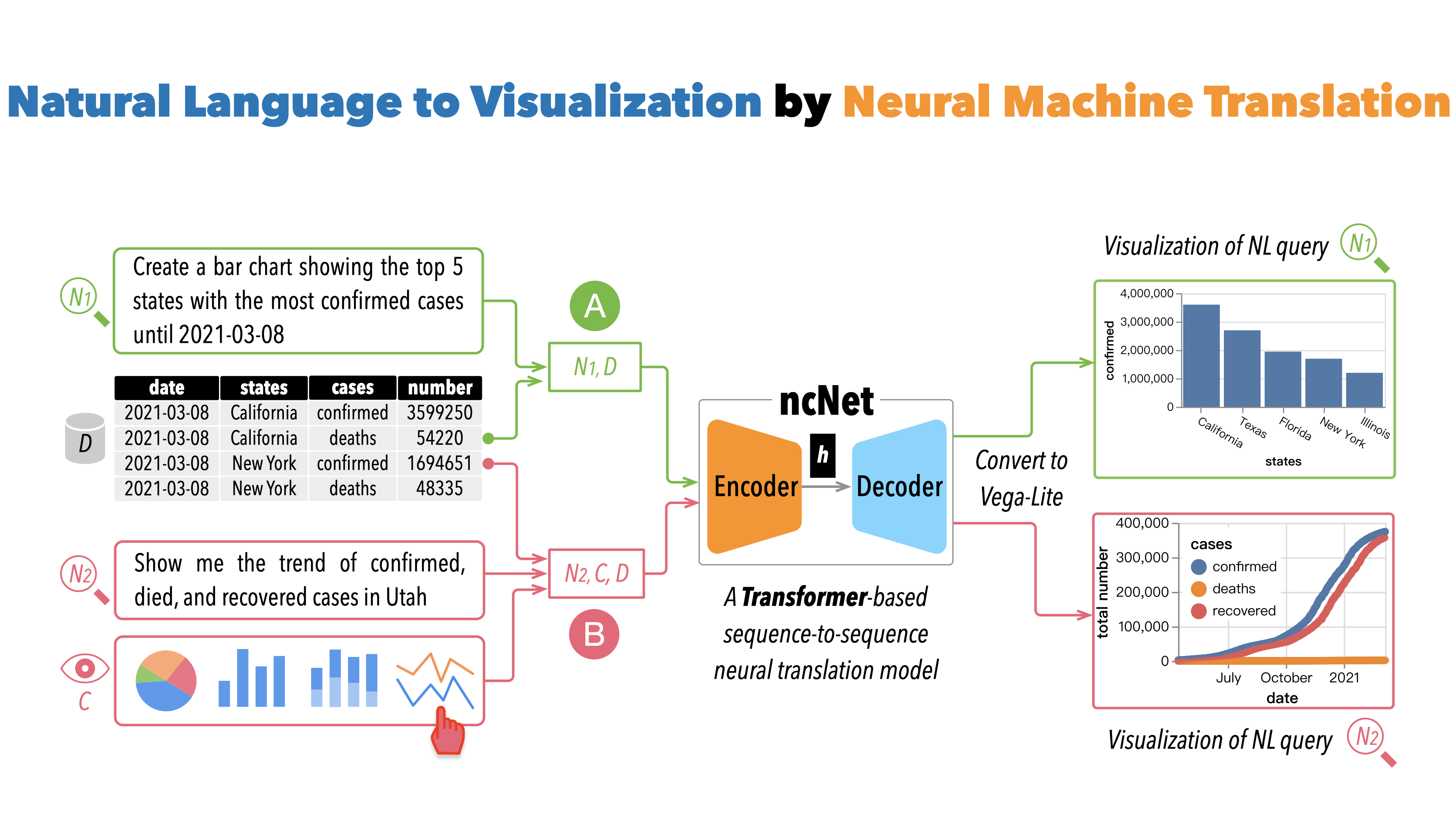

Natural Language to Visualization by Neural Machine Translation

Yuyu Luo, Nan Tang, Guoliang Li, Jiawei Tang, Chengliang Chai, Xuedi Qin


Presentation: 2021-10-28T13:45:00Z

Abstract

Supporting the translation from natural language (NL) to visualization (NL2VIS) can simplify the creation of data visualizations: if successful, anyone can generate visualizations using natural language. State-of-the-art NL2VIS approaches (e.g., NL4DV and FlowSense) are based on semantic parsers and heuristic algorithms, which are not end-to-end and are not designed to support (possibly) complex data transformations. Neural machine translation models powered by deep neural networks have made great strides in many machine translation tasks, which suggests that they might be viable for NL2VIS as well. In this paper, we present ncNet, a Transformer-based sequence-to-sequence model for supporting NL2VIS, with several novel visualization-aware optimizations, including attention forcing to optimize the learning process and visualization-aware rendering to produce better visualization results. To enhance the machine's ability to comprehend natural language queries, ncNet is also designed to take an optional chart template (e.g., a pie chart or a scatter plot) as input, where the chart template serves as a constraint that limits what can be visualized. We conducted both a quantitative evaluation and a user study, showing that neural machine translation techniques are easy to use and achieve good accuracy that is comparable with state-of-the-art NL2SQL results.
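
To make the idea of an optional chart template acting as a constraint more concrete, here is a minimal Python sketch of how the source sequence for a sequence-to-sequence model might be assembled. It is not ncNet's actual implementation: the tag tokens (`<nl>`, `<template>`, `<cols>`), the `[any]` placeholder, the function names, and the chart types other than pie and scatter are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

# Hypothetical tag tokens and chart vocabulary (assumptions, not ncNet's real ones).
NL_TAG, TEMPLATE_TAG, COLS_TAG = "<nl>", "<template>", "<cols>"
CHART_TYPES: Tuple[str, ...] = ("bar", "pie", "line", "scatter")


def build_source_tokens(
    nl_query: str,
    columns: List[str],
    chart_template: Optional[str] = None,
) -> List[str]:
    """Flatten the NL query, optional chart template, and column names
    into one token sequence that a seq2seq encoder could consume."""
    if chart_template is not None and chart_template not in CHART_TYPES:
        raise ValueError(f"unknown chart template: {chart_template!r}")
    tokens = [NL_TAG] + nl_query.lower().split()
    # "[any]" marks that the user supplied no template.
    tokens += [TEMPLATE_TAG, chart_template or "[any]"]
    tokens += [COLS_TAG] + [c.lower() for c in columns]
    return tokens


def allowed_chart_types(chart_template: Optional[str] = None) -> Tuple[str, ...]:
    """A template narrows the output space to one chart type;
    without one, every known chart type remains a candidate."""
    return (chart_template,) if chart_template else CHART_TYPES


if __name__ == "__main__":
    print(build_source_tokens("Show sales by region", ["region", "sales"], "pie"))
    print(allowed_chart_types("pie"))
```

In this sketch the template does two things: it appears in the input sequence so the model can condition on it, and it restricts which chart types are valid outputs. Whether ncNet enforces the constraint in either of these ways is not stated in the abstract.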