An Exploration And Validation of Visual Factors in Understanding Classification Rule Sets

Jun Yuan, Oded Nov, Enrico Bertini

2021-10-27T15:10:00Z

Keywords

Machine Learning, Statistics, Modelling, and Simulation Applications, Visual Representation Design, Human-Subjects Quantitative Studies, Tabular Data

Abstract

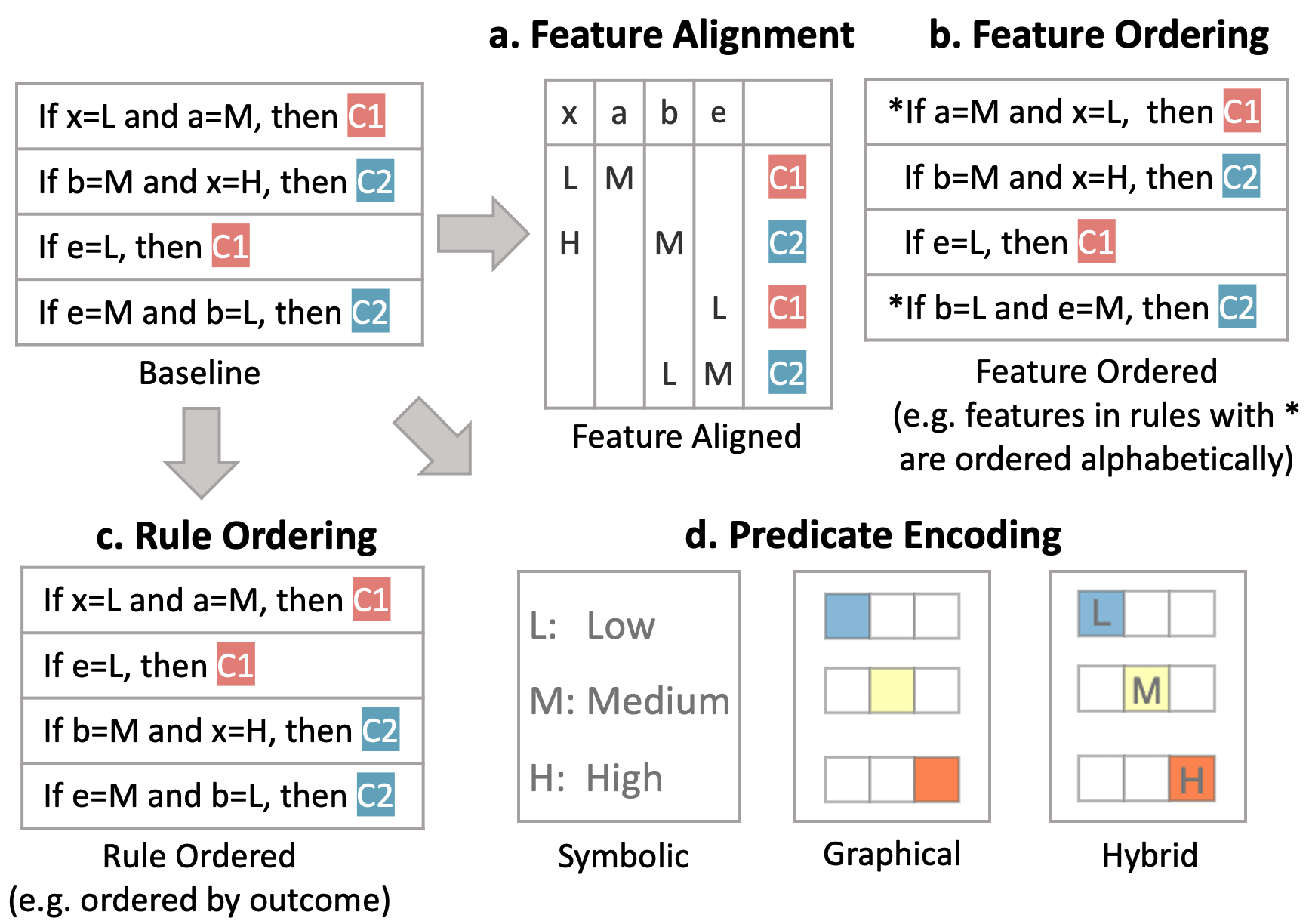

Rule sets are often used in Machine Learning (ML) as a way to communicate the model logic in settings where transparency and intelligibility are necessary. Rule sets are typically presented as a text-based list of logical statements (rules). Surprisingly, to date there has been limited work on exploring visual alternatives for presenting rules. In this paper, we explore the idea of designing alternative representations of rules, focusing on a number of visual factors we believe have a positive impact on rule readability and understanding. We then present a user study exploring their impact. The results show that some design factors have a strong impact on how efficiently readers can process the rules while having minimal impact on accuracy. This work can help practitioners employ more effective solutions when using rules as a communication strategy to understand ML models.