Conceptualizing Visual Analytic Interventions for Content Moderation

Sahaj Vaidya, Jie Cai, Soumyadeep Basu, Azadeh Naderi, Donghee Yvette Wohn, Aritra Dasgupta


View presentation: 2021-10-27T13:50:00Z


Keywords

Social Science, Education, Humanities, Journalism, Intelligence Analysis, Knowledge Work, Task Abstractions & Application Domains

Abstract

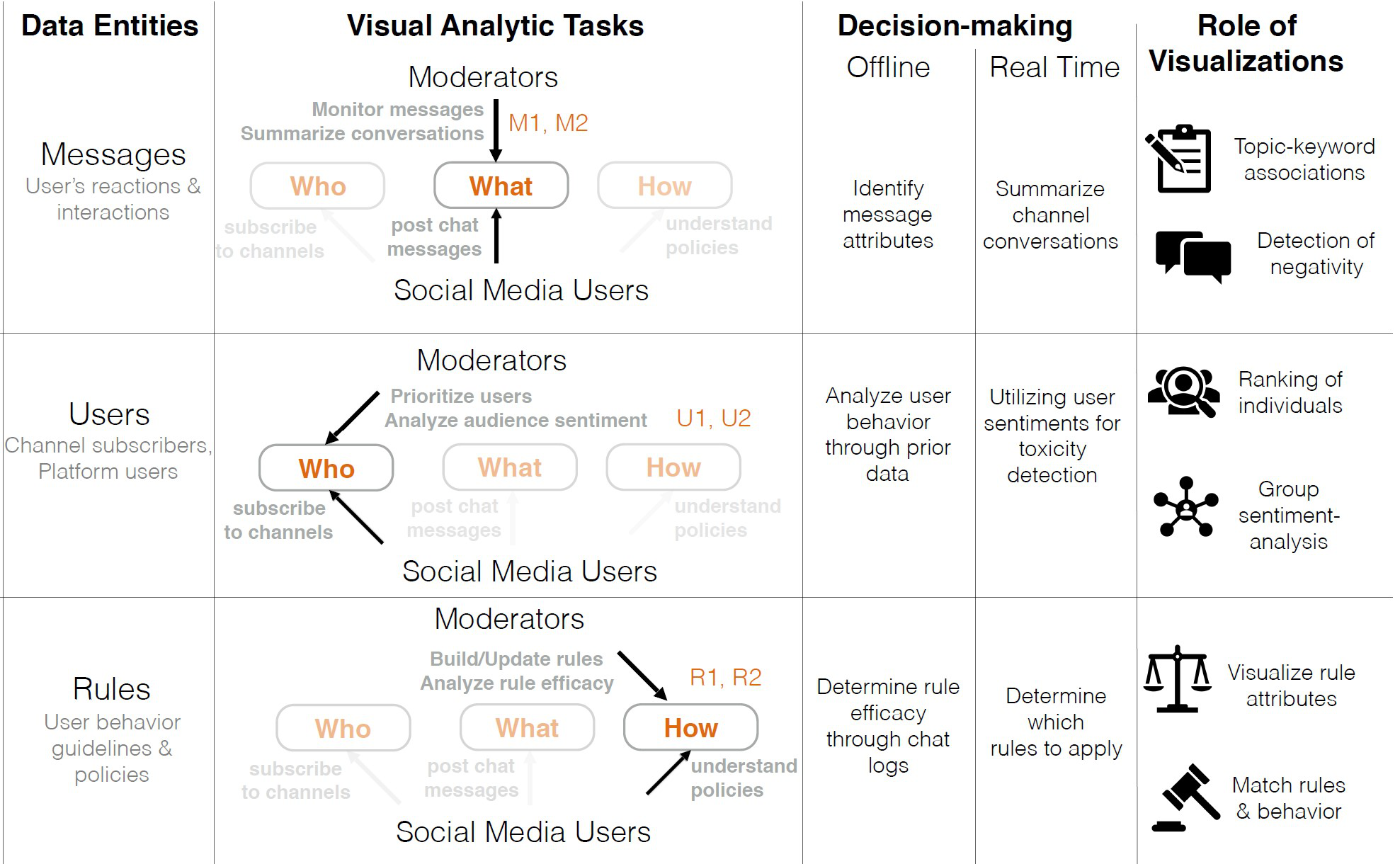

Our work introduces a visual analytic task abstraction framework for addressing data-driven problems in proactive content moderation. We also discuss how the framework, realized through visual analytic solutions, could shape more transparent and communicative moderation practices. As a next step, we plan to implement the proposed visual analytic tasks within existing content moderation workflows. We will also conduct empirical studies to evaluate how effectively these visual analytic interventions, and the human-machine interfaces built on them, reduce the cognitive load and emotional stress of content moderators.