AdViCE: Aggregated Visual Counterfactual Explanations for Machine Learning Model Validation

Steffen Holter, Oscar Alejandro Gomez, Jun Yuan, Enrico Bertini

Presentation: 2021-10-27T16:00:00Z

Keywords

Domain Agnostic; Charts, Diagrams, and Plots; Coordinated and Multiple Views; Other Topics and Techniques; Data Analysis, Reasoning, Problem Solving, and Decision Making; Algorithms; Interaction Design; Process/Workflow Design; Visual Representation Design; Tabular Data

Abstract

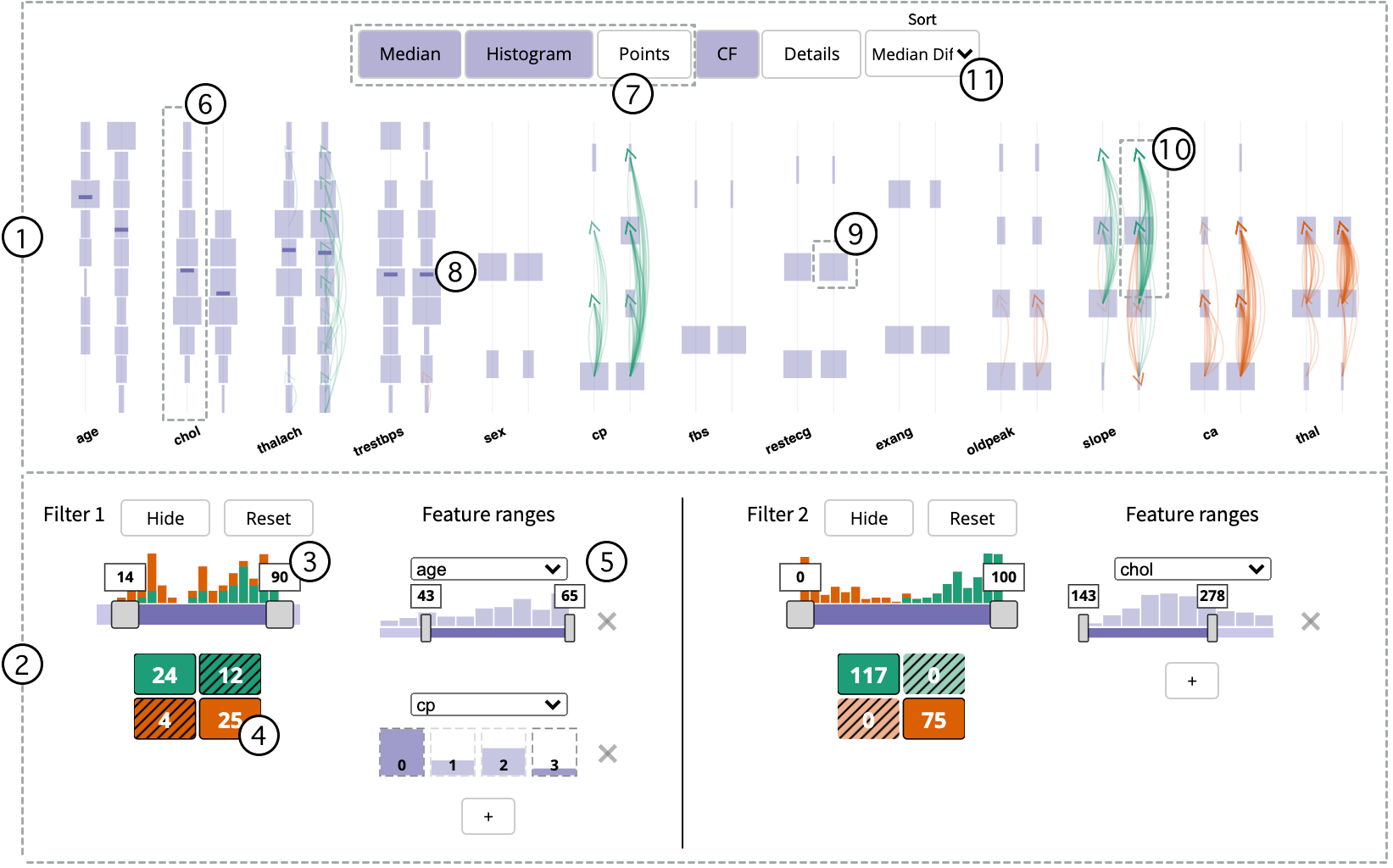

Rapid improvements in the performance of machine learning models have pushed them to the forefront of data-driven decision-making. Meanwhile, the increased integration of these models into various application domains has further highlighted the need for greater interpretability and transparency. To identify problems such as bias, overfitting, and incorrect correlations, data scientists require tools that explain the mechanisms by which these models make their decisions. In this paper we introduce AdViCE, a visual analytics tool that aims to guide users in black-box model debugging and validation. The solution rests on two main visual user interface innovations: (1) an interactive visualization design that enables the comparison of decisions on user-defined data subsets; (2) an algorithm and visual design to compute and visualize counterfactual explanations, that is, explanations that depict model outcomes when data features are perturbed from their original values. Furthermore, we evaluate the work through a number of use cases that demonstrate the capabilities and potential limitations of the proposed approach.
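To make the idea of a counterfactual explanation concrete, the following is a minimal sketch and not the algorithm used in AdViCE, which the abstract does not specify. It assumes a hypothetical black-box classifier `toy_model` over two invented features (`income`, `debt`) and a hypothetical helper `single_feature_counterfactuals` that, for each feature, searches a user-supplied grid for the value closest to the original that changes the model's prediction.

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, float]


def toy_model(row: Row) -> int:
    """Hypothetical black-box classifier (an assumption for illustration).

    Returns 1 (approve) when a simple linear score is non-negative, else 0.
    """
    score = 0.5 * row["income"] - 2.0 * row["debt"] - 10.0
    return 1 if score >= 0 else 0


def single_feature_counterfactuals(
    predict: Callable[[Row], int],
    row: Row,
    candidates: Dict[str, List[float]],
) -> Dict[str, Optional[float]]:
    """For each feature, return the candidate value nearest to the original
    that flips the prediction, or None if no candidate flips it.

    Only one feature is perturbed at a time; all others keep their
    original values.
    """
    original_label = predict(row)
    result: Dict[str, Optional[float]] = {}
    for feature, values in candidates.items():
        flipped: Optional[float] = None
        # Try candidates in order of increasing distance from the original value.
        for value in sorted(values, key=lambda v: abs(v - row[feature])):
            perturbed = dict(row)
            perturbed[feature] = value
            if predict(perturbed) != original_label:
                flipped = value
                break
        result[feature] = flipped
    return result


if __name__ == "__main__":
    applicant = {"income": 30.0, "debt": 5.0}
    grid = {
        "income": [20.0, 30.0, 40.0, 50.0, 60.0],
        "debt": [0.0, 2.5, 5.0, 7.5],
    }
    print(toy_model(applicant))
    print(single_feature_counterfactuals(toy_model, applicant, grid))
```

For this toy applicant the model predicts 0, and the search reports that raising `income` to 40 or lowering `debt` to 2.5 would each flip the outcome. Perturbing one feature at a time keeps each explanation easy to read, at the cost of missing counterfactuals that require changing several features together.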