A State-of-the-Art Survey of Tasks for Tree Design and Evaluation with a Curated Task Dataset

Aditeya Pandey, Uzma Syeda, Chaitya Shah, John Gomez, Michelle Borkin


Presentation time: 2021-10-28T17:30:00Z

Fast forward

Direct link to video on YouTube: https://youtu.be/lGcF0OjRFJE

Keywords

STAR, Survey, Tree, Tasks, Task Abstraction, Theory, Dataset

Abstract

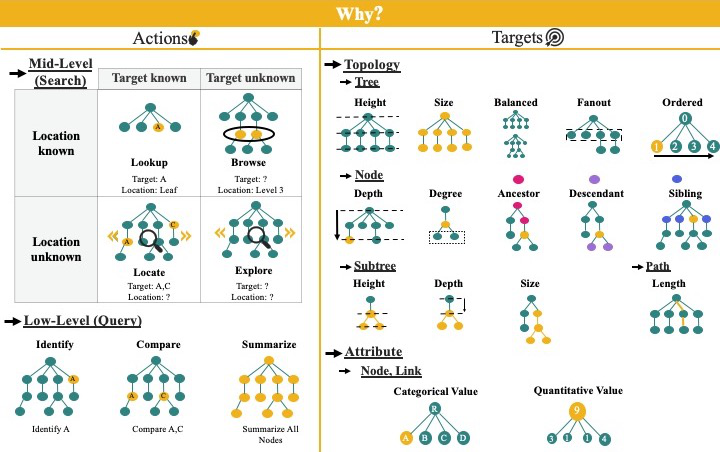

In the field of information visualization, the concept of "tasks" is an essential component of theories and methodologies for how a visualization researcher or practitioner understands what a user needs to do and how to approach the creation of a new design. In this paper, we focus on the collection of tasks for tree visualizations, a common visual encoding in many domains ranging from biology to computer science to geography. Despite the prevalence of tree visualizations, no prior efforts exist to collect and abstractly define tree visualization tasks. We present a literature review of tree visualization papers and generate a curated dataset of over 200 tasks. To enable effective task abstraction for trees, we also contribute a novel extension of the Multi-Level Task Typology that adds the specificity needed to support tree-specific tasks, as well as a systematic procedure for conducting task abstractions for tree visualizations. All tasks in the dataset were abstracted with the novel typology extension and analyzed to gain a better understanding of the state of tree visualizations. These abstracted tasks can benefit visualization researchers and practitioners as they design evaluation studies or compare their analytical tasks with ones previously studied in the literature to make informed decisions about their design. We also reflect on our novel methodology and advocate more broadly for the creation of task-based knowledge repositories for different types of visualizations. The Supplemental Material will be maintained on OSF: https://osf.io/u5eh