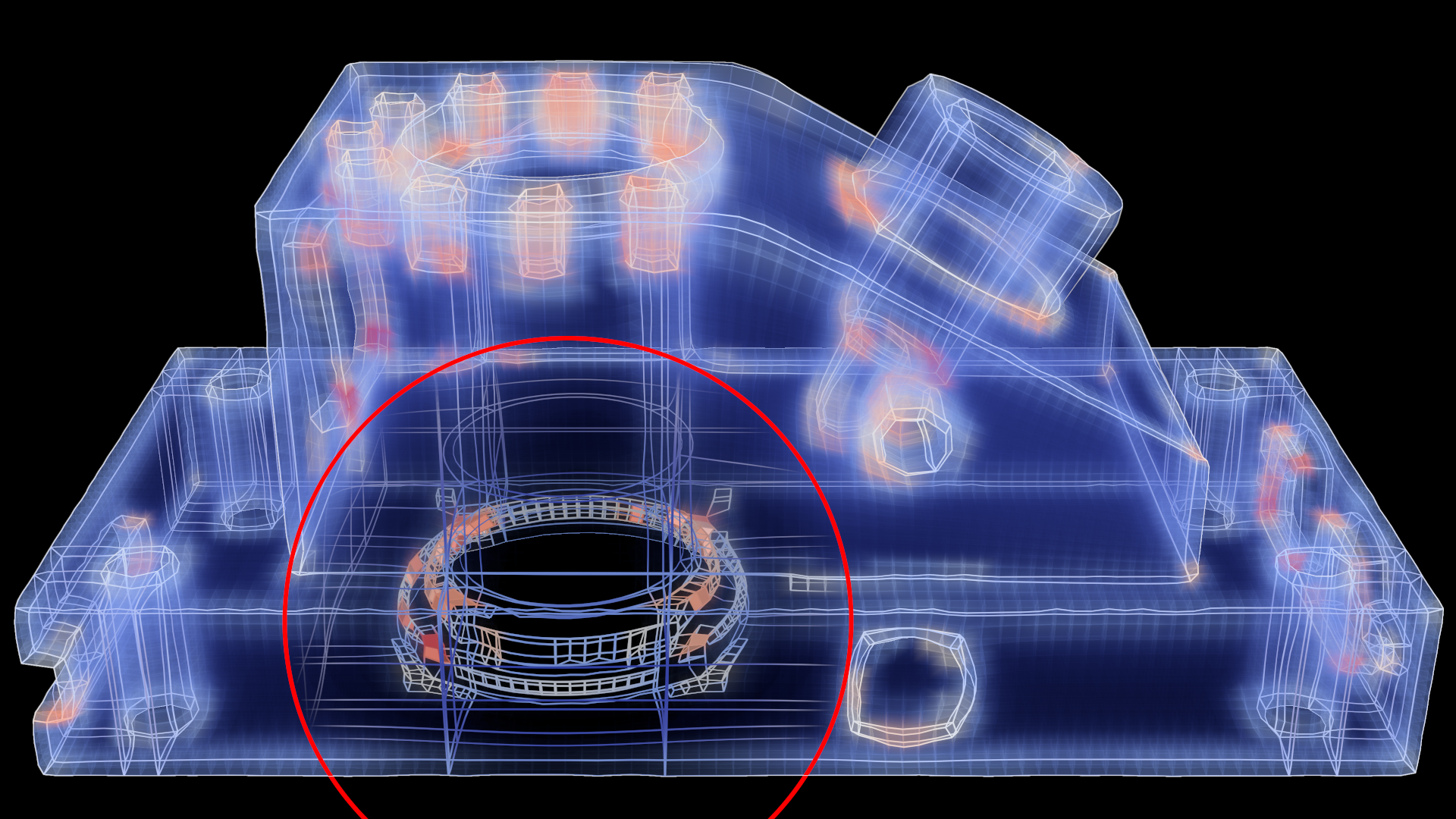

Interactive Focus+Context Rendering for Hexahedral Mesh Inspection

Christoph Neuhauser, Junpeng Wang, Rüdiger Westermann


Presentation: 2021-10-28T15:00:00Z

Fast forward

Direct link to video on YouTube: https://youtu.be/SjM4kUzl8Xw

Keywords

Visualization of Hex-Meshes, Real-Time Rendering, GPUs

Abstract

The visual inspection of a hexahedral mesh with respect to element quality is difficult due to clutter and occlusions that are produced when rendering all element faces or their edges simultaneously. Current approaches overcome this problem by focusing on specific elements, which are then rendered opaque, and carving away all elements that occlude them. In this work, we make use of advanced GPU shader functionality to generate a focus+context rendering that highlights the elements in a selected region and simultaneously conveys the global mesh structure and deformation field. To achieve this, we propose a gradual transition from edge-based focus rendering to volumetric context rendering by combining fragment shader-based edge and face rendering with per-pixel fragment lists. A fragment shader smoothly transitions between wireframe and face-based rendering, including focus-dependent rendering style and depth-dependent edge thickness and halos, and per-pixel fragment lists are used to blend fragments in correct visibility order. To maintain the global mesh structure in the context regions, we propose a new method to construct a sheet-based level-of-detail hierarchy and smoothly blend it with volumetric information. The user guides the exploration process by moving a lens-like hotspot. Since all operations are performed on the GPU, interactive frame rates are achieved even for large meshes.
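To illustrate what blending fragments "in correct visibility order" means, the following is a minimal CPU-side sketch of compositing one pixel's fragment list front to back. It is not the authors' GPU implementation: the fragment layout `(depth, rgb, alpha)`, the function name `blend_fragment_list`, and the early-termination threshold are assumptions made for illustration only.

```python
from typing import List, Tuple

# Hypothetical fragment layout (an assumption, not taken from the paper):
# (depth, (r, g, b), alpha), where a smaller depth is closer to the camera.
Fragment = Tuple[float, Tuple[float, float, float], float]


def blend_fragment_list(fragments: List[Fragment]) -> Tuple[float, float, float, float]:
    """Composite one pixel's fragments front to back.

    Returns premultiplied RGB and the accumulated alpha.
    """
    r = g = b = 0.0
    acc_alpha = 0.0
    # Sort by depth so that the nearest fragment is blended first.
    for _depth, (fr, fg, fb), fa in sorted(fragments, key=lambda f: f[0]):
        # A fragment contributes only through the transparency still left in front of it.
        weight = (1.0 - acc_alpha) * fa
        r += weight * fr
        g += weight * fg
        b += weight * fb
        acc_alpha += weight
        # Assumed cutoff: stop once the pixel is effectively opaque.
        if acc_alpha >= 0.999:
            break
    return (r, g, b, acc_alpha)


# A half-transparent red fragment in front of an opaque blue one.
pixel = blend_fragment_list([
    (2.0, (0.0, 0.0, 1.0), 1.0),
    (1.0, (1.0, 0.0, 0.0), 0.5),
])
```

Because the list is sorted before blending, the result does not depend on the order in which fragments were generated, which is the property that per-pixel fragment lists provide over plain hardware blending.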