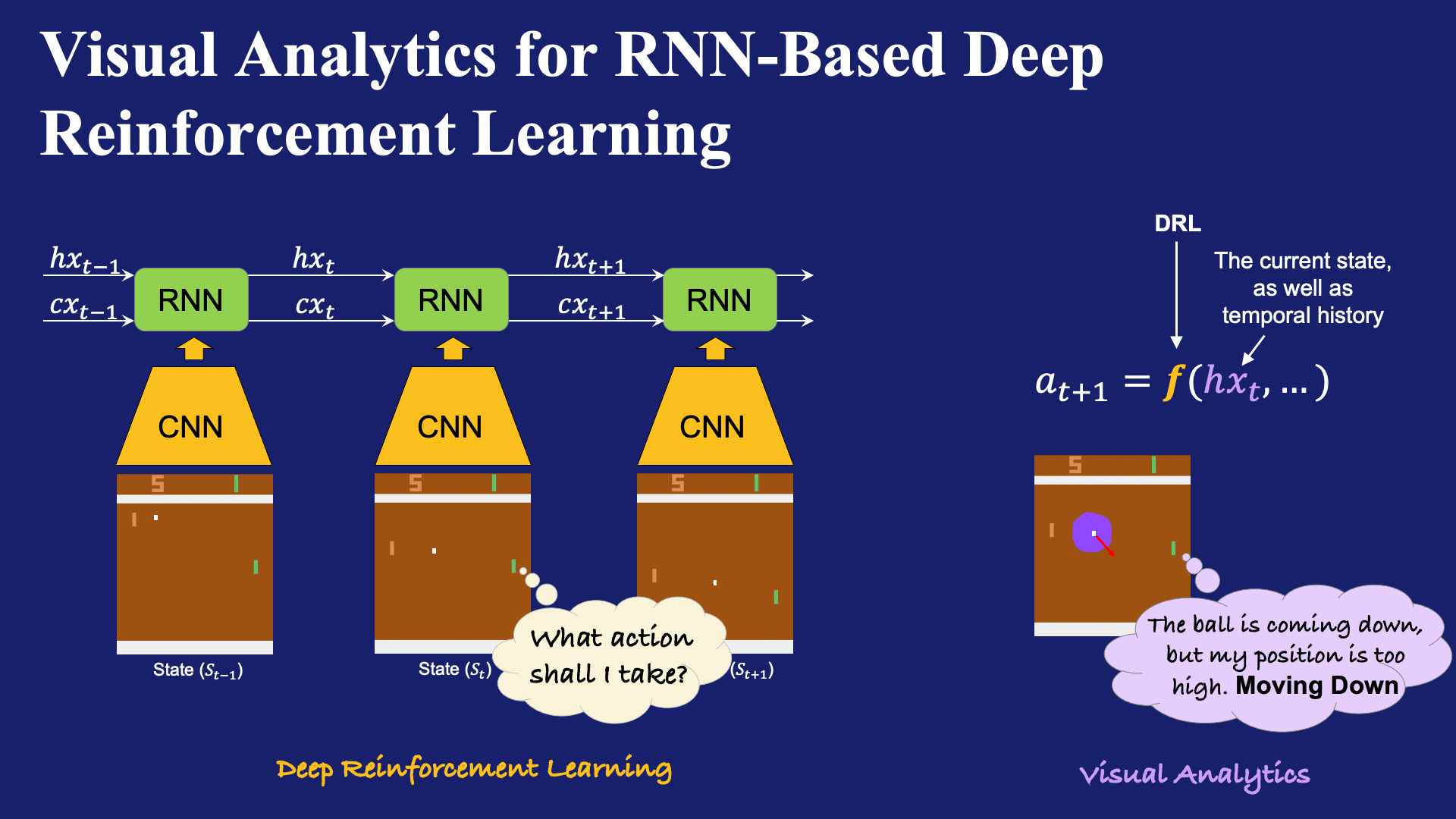

Visual Analytics for RNN-Based Deep Reinforcement Learning

Junpeng Wang, Wei Zhang, Hao Yang, Chin-Chia Yeh, Liang Wang

View presentation: 2021-10-27T14:15:00Z

Fast forward

Direct link to video on YouTube: https://youtu.be/QJrHluJ3NgQ

Keywords

Deep reinforcement learning (DRL), recurrent neural network (RNN), model interpretation, visual analytics.

Abstract

Deep reinforcement learning (DRL) aims to train an autonomous agent that interacts with a pre-defined environment and strives to achieve specific goals through deep neural networks (DNNs). Recurrent neural network (RNN) based DRL has demonstrated superior performance, as RNNs can effectively capture the temporal evolution of the environment and respond with proper agent actions. However, despite this strong performance, little is known about how RNNs understand the environment internally and what they memorize over time. Revealing these details is extremely important for deep learning experts who want to understand and improve DRL models, yet it is challenging because of the complicated data transformations inside these models. In this paper, we propose Deep Reinforcement Learning Interactive Visual Explorer (DRLIVE), a visual analytics system to effectively explore, interpret, and diagnose RNN-based DRL models. Focusing on DRL agents trained for different Atari games, DRLIVE supports three tasks: game episode exploration, RNN hidden/cell state examination, and interactive model perturbation. Using the system, one can flexibly explore a DRL agent through interactive visualizations, discover interpretable RNN cells by prioritizing RNN hidden/cell states with a set of metrics, and further diagnose the DRL model by interactively perturbing its inputs. Through concrete studies with multiple deep learning experts, we validated the efficacy of DRLIVE.