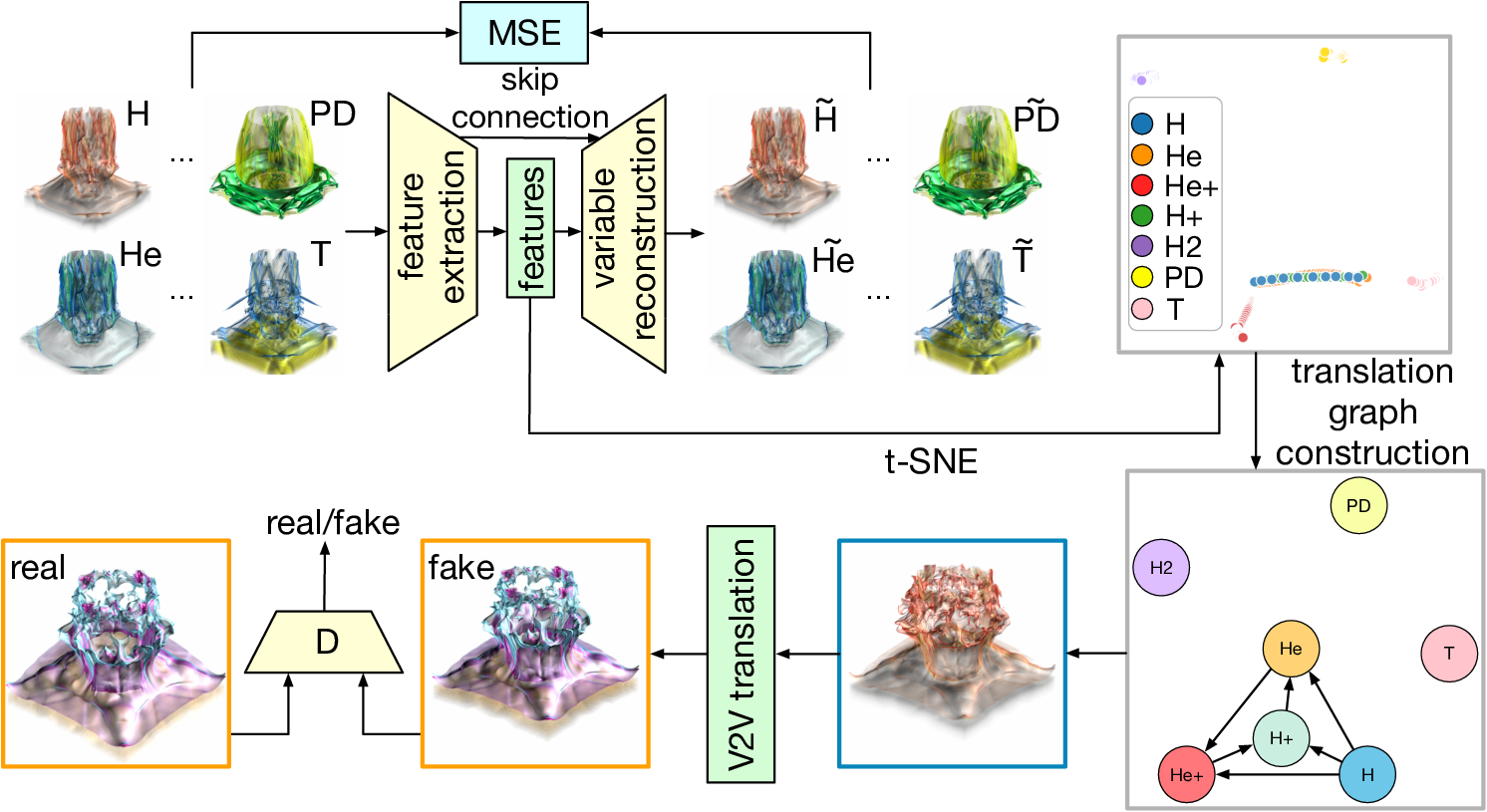

V2V: A Deep Learning Approach to Variable-to-Variable Selection and Translation for Multivariate Time-Varying Data

Jun Han, Hao Zheng, Yunhao Xing, Danny Chen, Chaoli Wang


Presentation time: 2020-10-29T18:30:00Z

Fast forward

Direct link to video on YouTube: https://youtu.be/OsX3v4aUONE

Keywords

Multivariate time-varying data, variable selection and translation, generative adversarial network, data extrapolation.

Abstract

We present V2V, a novel deep learning framework, as a general-purpose solution to the variable-to-variable (V2V) selection and translation problem for multivariate time-varying data (MTVD) analysis and visualization. V2V leverages a representation learning algorithm to identify transferable variables and utilizes Kullback-Leibler divergence to determine the source and target variables. It then uses a generative adversarial network (GAN) to learn the mapping from the source variable to the target variable via adversarial, volumetric, and feature losses. V2V takes pairs of time steps of the source and target variables as input for training. Once trained, it can infer unseen time steps of the target variable given the corresponding time steps of the source variable. Several multivariate time-varying data sets with different characteristics are used to demonstrate the effectiveness of V2V, both quantitatively and qualitatively. We compare V2V against histogram matching and two other deep learning solutions (Pix2Pix and CycleGAN).
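The abstract names two quantitative ingredients: a Kullback-Leibler divergence used to decide source and target variables, and a generator objective built from adversarial, volumetric, and feature terms. The following is a minimal sketch of both, not the authors' implementation. It assumes the divergence is computed over value histograms, that the adversarial term takes a least-squares form, that the volumetric and feature terms are mean squared errors, and that volumes are given as flat lists of voxel values. All function names and the weights `w_adv`, `w_vol`, and `w_feat` are hypothetical.

```python
import math


def histogram(values, bins=32):
    """Normalized histogram of values after min-max scaling to [0, 1]."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # guard against constant fields
    counts = [0] * bins
    for v in values:
        # Clamp the top edge into the last bin.
        idx = min(int((v - lo) / span * bins), bins - 1)
        counts[idx] += 1
    total = len(values)
    return [c / total for c in counts]


def kl_divergence(p, q, eps=1e-10):
    """KL(P || Q) for two discrete distributions with the same bin count."""
    # Smooth with eps so empty bins do not produce log(0) or division by zero.
    p = [x + eps for x in p]
    q = [x + eps for x in q]
    sp, sq = sum(p), sum(q)
    return sum((a / sp) * math.log((a / sp) / (b / sq)) for a, b in zip(p, q))


def mse(a, b):
    """Mean squared error between two equally sized flat sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def generator_loss(d_fake, fake_vol, real_vol, fake_feats, real_feats,
                   w_adv=1.0, w_vol=1.0, w_feat=1.0):
    """Weighted sum of adversarial, volumetric, and feature terms (assumed forms)."""
    # Adversarial term (assumed least-squares): push discriminator scores toward 1.
    adv = sum((d - 1.0) ** 2 for d in d_fake) / len(d_fake)
    # Volumetric term (assumed): voxel-wise error against the true target volume.
    vol = mse(fake_vol, real_vol)
    # Feature term (assumed): error between intermediate feature maps, averaged over layers.
    feat = sum(mse(f, r) for f, r in zip(fake_feats, real_feats)) / len(fake_feats)
    return w_adv * adv + w_vol * vol + w_feat * feat
```

Because KL divergence is asymmetric, `kl_divergence(p, q)` and `kl_divergence(q, p)` generally differ, which is what makes it usable for choosing a direction between two variables. How V2V actually turns the divergence into a source/target decision is not stated in the abstract.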