ConfusionFlow: A model-agnostic visualization for temporal analysis of classifier confusion

Andreas Hinterreiter, Peter Ruch, Holger Stitz, Martin Ennemoser, Jürgen Bernard, Hendrik Strobelt, Marc Streit


View presentation: 2020-10-27T18:45:00Z

Fast forward

Direct link to video on YouTube: https://youtu.be/D7twPlxGqrE

Keywords

Classification, performance analysis, time series visualization

Abstract

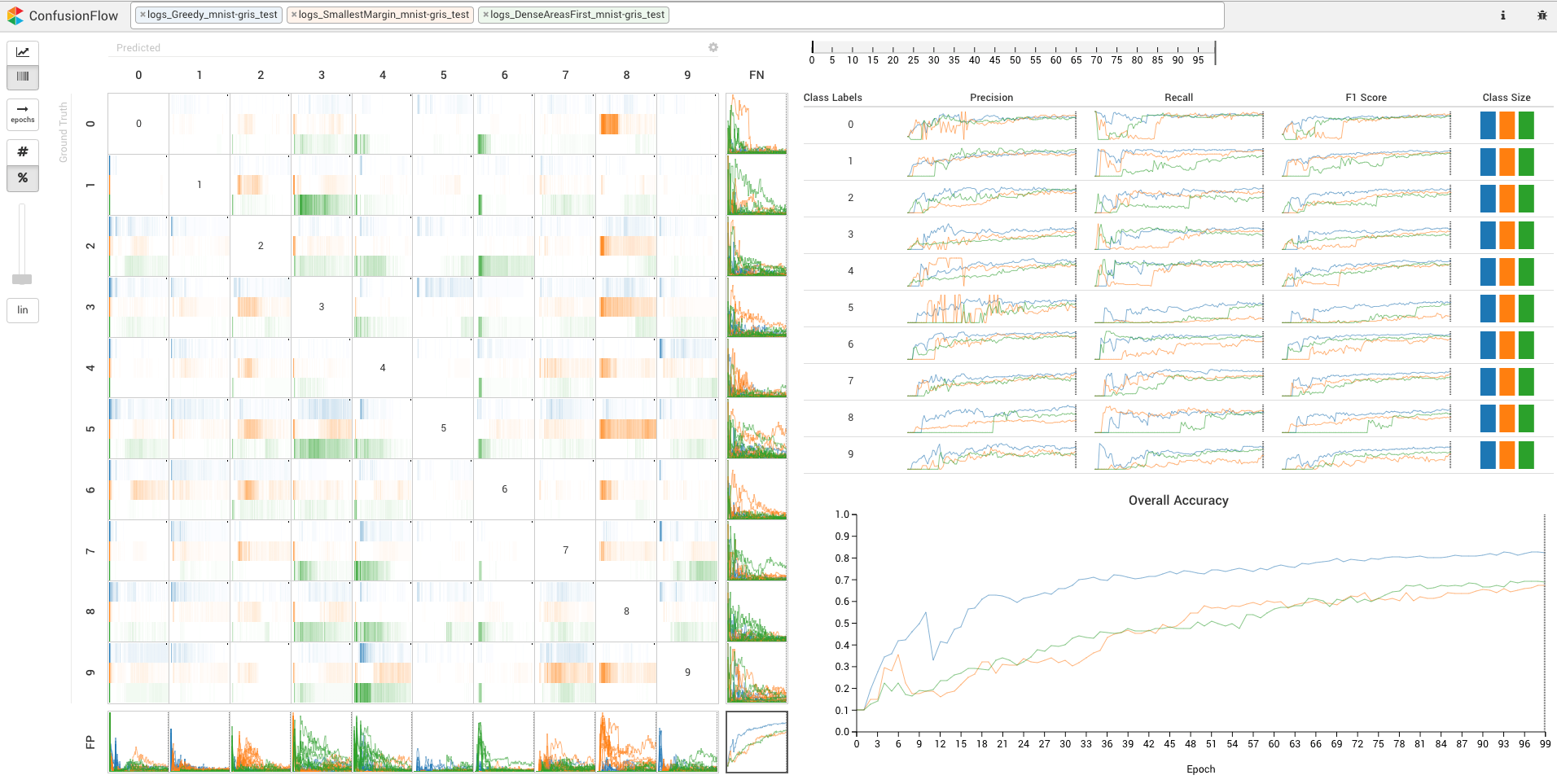

Classifiers are among the most widely used supervised machine learning algorithms. Many classification models exist, and choosing the right one for a given task is difficult. During model selection and debugging, data scientists need to assess classifiers' performance, evaluate their learning behavior over time, and compare different models. Typically, this analysis is based on single-number performance measures such as accuracy. A more detailed evaluation of classifiers is possible by inspecting class errors. The confusion matrix is an established way of visualizing these class errors, but it was not designed with temporal or comparative analysis in mind. More generally, established performance analysis systems do not allow a combined temporal and comparative analysis of class-level information. To address this issue, we propose ConfusionFlow, an interactive, comparative visualization tool that combines the benefits of class confusion matrices with the visualization of performance characteristics over time. ConfusionFlow is model-agnostic and can be used to compare performance across different model types, model architectures, and/or training and test datasets. We demonstrate the usefulness of ConfusionFlow in a case study on instance selection strategies in active learning. We further assess the scalability of ConfusionFlow and present a use case in the context of neural network pruning.
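The core idea of tracking class confusion over time can be illustrated with a minimal sketch. This is not ConfusionFlow's implementation or API. It only assumes that one set of predictions is logged per training epoch, and the function names and toy data below are hypothetical. Stacking one confusion matrix per epoch turns each matrix cell into a time series, which is the kind of class-level temporal information the abstract describes.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Count how often each true class (row) is predicted as each class (column)."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # unbuffered add handles repeated index pairs
    return cm

def confusion_over_time(y_true, preds_per_epoch, n_classes):
    """Stack one confusion matrix per epoch: shape (epochs, classes, classes)."""
    return np.stack([confusion_matrix(y_true, p, n_classes) for p in preds_per_epoch])

# Toy data, made up for illustration: 3 classes, 6 samples, 3 epochs.
y_true = np.array([0, 0, 1, 1, 2, 2])
preds_per_epoch = [
    np.array([1, 1, 1, 2, 2, 0]),  # epoch 0
    np.array([0, 1, 1, 1, 2, 0]),  # epoch 1
    np.array([0, 0, 1, 1, 2, 2]),  # epoch 2
]

cms = confusion_over_time(y_true, preds_per_epoch, n_classes=3)

# Time series for a single cell: true class 0 predicted as class 1.
zero_as_one = cms[:, 0, 1]

# The single-number measure per epoch, for comparison: trace / total count.
accuracy = np.trace(cms, axis1=1, axis2=2) / cms.sum(axis=(1, 2))

print(zero_as_one)  # [2 1 0]
print(accuracy)
```

Running the same function on a second model's predictions gives a second stack of matrices, so the two can be compared cell by cell and epoch by epoch.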