StackGenVis: Alignment of Data, Algorithms, and Models for Stacking Ensemble Learning Using Performance Metrics

Angelos Chatzimparmpas, Rafael M. Martins, Kostiantyn Kucher, Andreas Kerren


Presentation: 2020-10-29T15:15:00Z

Fast forward

Direct link to video on YouTube: https://youtu.be/9lvdgPHGfsQ

Keywords

stacking, stacked generalization, ensemble learning, visual analytics, visualization

Abstract

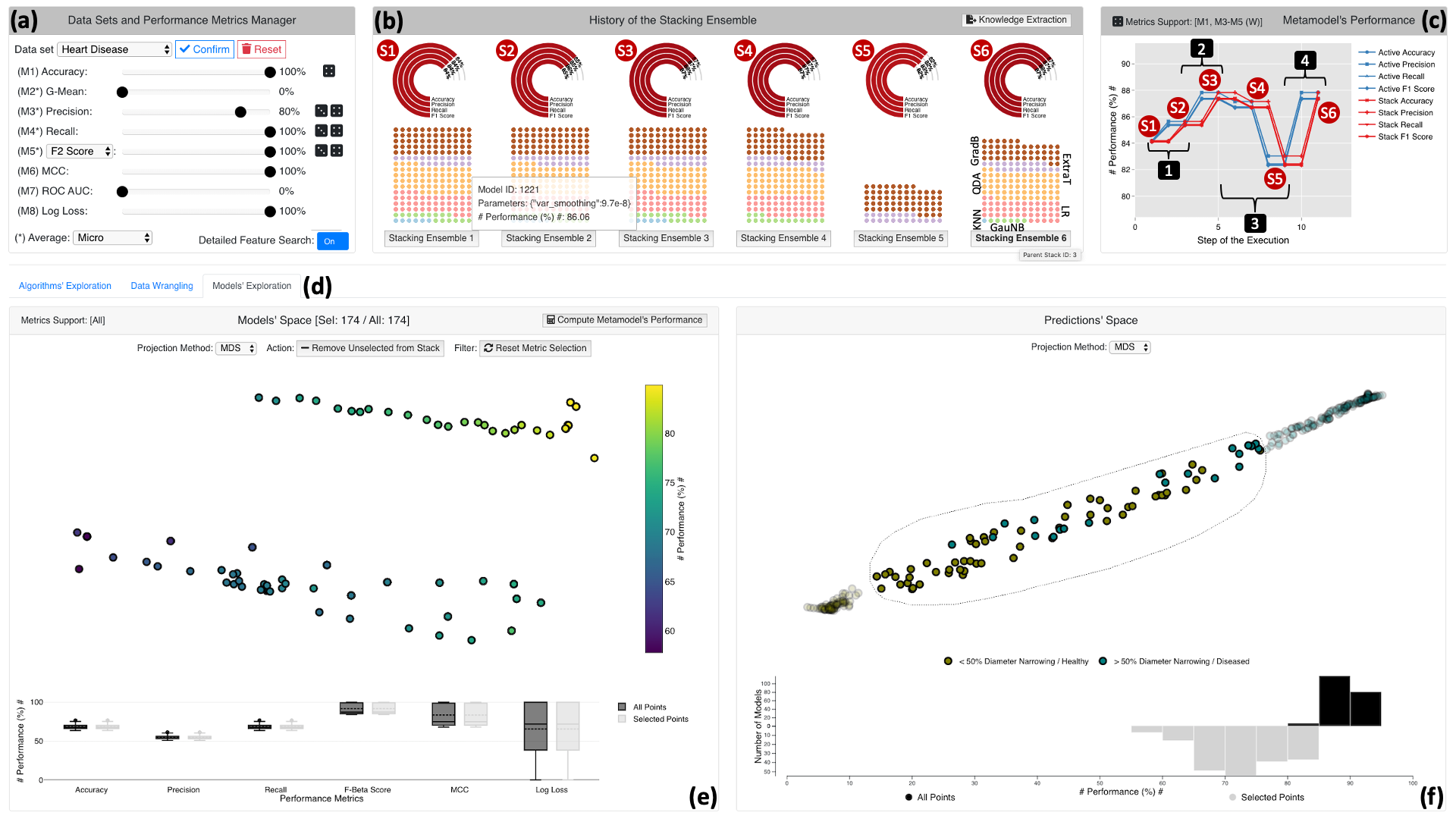

In machine learning (ML), ensemble methods such as bagging, boosting, and stacking are widely established approaches that regularly achieve top-notch predictive performance. Stacking (also called “stacked generalization”) is an ensemble method that combines heterogeneous base models, arranged in at least one layer, and then employs a metamodel to summarize the predictions of those models. Although it can be a highly effective approach for increasing the predictive performance of ML, generating a stack of models from scratch can be a cumbersome trial-and-error process. This challenge stems from the enormous space of available solutions: different sets of data instances and features that could be used for training, several algorithms to choose from, and instantiations of these algorithms with diverse parameters (i.e., models) that perform differently according to various metrics. In this work, we present a knowledge generation model, which supports ensemble learning with the use of visualization, and a visual analytics system for stacked generalization. Our system, StackGenVis, assists users in dynamically adapting performance metrics, managing data instances, selecting the most important features for a given data set, choosing a set of top-performing and diverse algorithms, and measuring the predictive performance. Consequently, our proposed tool helps users decide between distinct models and reduce the complexity of the resulting stack by removing overpromising and underperforming models. The applicability and effectiveness of StackGenVis are demonstrated with two use cases: a real-world healthcare data set and a collection of data related to sentiment/stance detection in texts. Finally, the tool has been evaluated through interviews with three ML experts.
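To make the stacking idea in the abstract concrete, here is a minimal sketch in plain Python. It is not the StackGenVis implementation. The base models (`NearestCentroid`, `Stump`), the lookup-table metamodel (`LookupMeta`), the helper functions, and the synthetic two-class data are all assumptions chosen for illustration. The sketch trains heterogeneous base models, collects their out-of-fold predictions, and fits a metamodel on those predictions.

```python
import random
from collections import Counter, defaultdict


class NearestCentroid:
    """Hypothetical base model 1: predict the class with the closest feature mean."""
    def fit(self, X, y):
        self.centroids = {}
        for label in set(y):
            rows = [x for x, t in zip(X, y) if t == label]
            self.centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, X):
        def dist(a, b):
            return sum((p - q) ** 2 for p, q in zip(a, b))
        return [min(self.centroids, key=lambda c: dist(x, self.centroids[c])) for x in X]


class Stump:
    """Hypothetical base model 2: a threshold on one feature (binary labels 0/1)."""
    def __init__(self, feature):
        self.feature = feature

    def fit(self, X, y):
        best = None
        for t in sorted({x[self.feature] for x in X}):
            for lo, hi in ((0, 1), (1, 0)):
                hits = sum((hi if x[self.feature] > t else lo) == label
                           for x, label in zip(X, y))
                if best is None or hits > best[0]:
                    best = (hits, t, lo, hi)
        _, self.threshold, self.lo, self.hi = best
        return self

    def predict(self, X):
        return [self.hi if x[self.feature] > self.threshold else self.lo for x in X]


class LookupMeta:
    """Hypothetical metamodel: majority label seen for each tuple of base predictions."""
    def fit(self, Z, y):
        votes = defaultdict(Counter)
        for z, label in zip(Z, y):
            votes[tuple(z)][label] += 1
        self.table = {z: c.most_common(1)[0][0] for z, c in votes.items()}
        self.default = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, Z):
        return [self.table.get(tuple(z), self.default) for z in Z]


def fit_stack(make_base_models, X, y, k=5):
    """Fit base models, then fit the metamodel on their out-of-fold predictions."""
    n = len(X)
    Z = [None] * n
    for fold in range(k):
        held_out = [i for i in range(n) if i % k == fold]
        train = [i for i in range(n) if i % k != fold]
        models = [m.fit([X[i] for i in train], [y[i] for i in train])
                  for m in make_base_models()]
        preds = [m.predict([X[i] for i in held_out]) for m in models]
        for j, i in enumerate(held_out):
            Z[i] = [p[j] for p in preds]
    meta = LookupMeta().fit(Z, y)
    base = [m.fit(X, y) for m in make_base_models()]  # refit on all data
    return base, meta


def predict_stack(base, meta, X):
    preds = [m.predict(X) for m in base]
    return meta.predict(list(zip(*preds)))


# Synthetic two-class data (an assumption; unrelated to the paper's data sets).
rng = random.Random(0)
X, y = [], []
for _ in range(100):
    label = rng.randint(0, 1)
    X.append([rng.gauss(2.0 * label, 1.0), rng.gauss(2.0 * label, 1.0)])
    y.append(label)

base, meta = fit_stack(lambda: [NearestCentroid(), Stump(0), Stump(1)], X, y)
predictions = predict_stack(base, meta, X)
accuracy = sum(p == t for p, t in zip(predictions, y)) / len(y)
print(f"training accuracy of the stack: {accuracy:.2f}")
```

Fitting the metamodel on out-of-fold predictions, rather than on predictions for data the base models were trained on, keeps it from rewarding base models that merely memorize the training set. The choices this sketch hard-codes (which models, which features, which metric) are the ones the abstract says StackGenVis helps users explore.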