Sub-Linear Time Sampling Approach for Large-Scale Data Visualization Using Reinforcement Learning

Ayan Biswas, Arindam Bhattacharya, Yi-Tang Chen, Han-Wei Shen

Room: 106

2023-10-22T22:00:00Z

Abstract

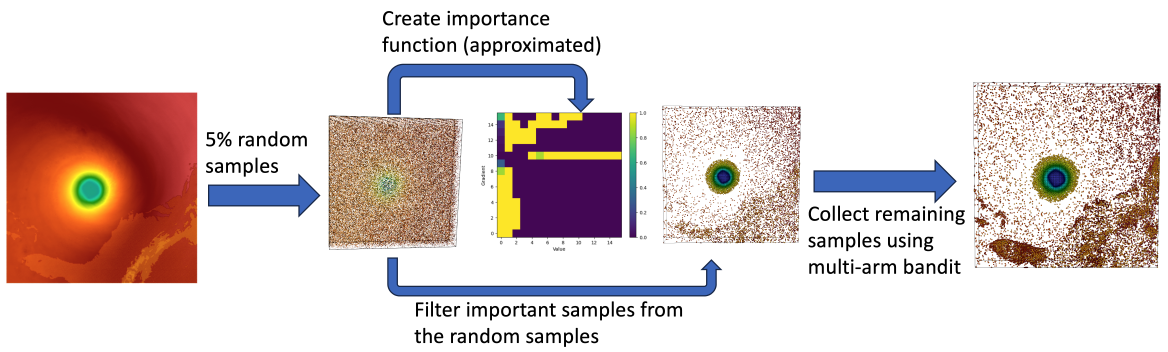

As the compute capabilities of modern supercomputers continue to rise, domain scientists are able to run their simulations at very fine spatial and temporal resolutions. I/O bandwidth, however, continues to lag behind compute speeds by orders of magnitude. This necessitates performing analysis and data reduction in situ, while the simulation-generated data still resides in the supercomputer's memory. Recently, visualization researchers have proposed intelligent data-driven sampling schemes. These sampling methods are scalable, in situ capable, and able to identify the feature regions of the data. Although powerful, these sampling schemes need to traverse the data set at least twice, which can become problematic as simulations approach exascale. In this paper, we propose a reinforcement learning-based approach to devise a sub-linear time sampling algorithm. Using multi-armed bandits, we show that we can identify samples of similar or better quality compared to existing methods while touching only a small fraction of the original data. We use multiple simulation data sets to show the efficacy of our proposed method.
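To make the idea of bandit-driven sampling concrete, the following is a minimal sketch and not the algorithm from the paper, whose details the abstract does not give. It assumes a 1D array split into contiguous blocks that act as bandit arms, a standard UCB1 selection rule, and a local gradient magnitude as the reward. The function name `bandit_sample`, the parameters `n_blocks` and `budget`, and the reward definition are all hypothetical choices made for illustration. The sampler reads at most two data values per pull, so the number of values it touches is bounded by the budget rather than by the data size.

```python
import math
import random


def bandit_sample(data, n_blocks, budget, seed=0):
    """Illustrative UCB1 sampler over contiguous blocks (hypothetical, not the paper's method).

    Returns a sorted list of sampled indices. Assumes len(data) > 0 and n_blocks > 0.
    """
    rng = random.Random(seed)
    n = len(data)
    block_size = math.ceil(n / n_blocks)
    n_arms = math.ceil(n / block_size)  # avoids empty trailing blocks

    pulls = [0] * n_arms    # number of times each arm was pulled
    total = [0.0] * n_arms  # sum of rewards per arm
    max_grad = 0.0          # running maximum, used to scale rewards into [0, 1]
    chosen = set()

    for t in range(1, budget + 1):
        if t <= n_arms:
            arm = t - 1  # pull every arm once before applying UCB1
        else:
            arm = max(
                range(n_arms),
                key=lambda a: total[a] / pulls[a]
                + math.sqrt(2.0 * math.log(t) / pulls[a]),
            )

        lo = arm * block_size
        hi = min(n, lo + block_size)
        i = rng.randrange(lo, hi)

        # Assumed reward: local gradient magnitude, scaled by the largest seen so far.
        grad = abs(data[min(i + 1, n - 1)] - data[i])
        max_grad = max(max_grad, grad)
        reward = grad / max_grad if max_grad > 0 else 0.0

        pulls[arm] += 1
        total[arm] += reward
        chosen.add(i)  # repeated draws of the same index are kept once

    return sorted(chosen)
```

With a budget that is a small fraction of `len(data)`, blocks whose values vary strongly tend to be pulled more often, so the returned indices concentrate there. A feature-aware reward suited to a particular simulation would replace the gradient heuristic assumed here.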