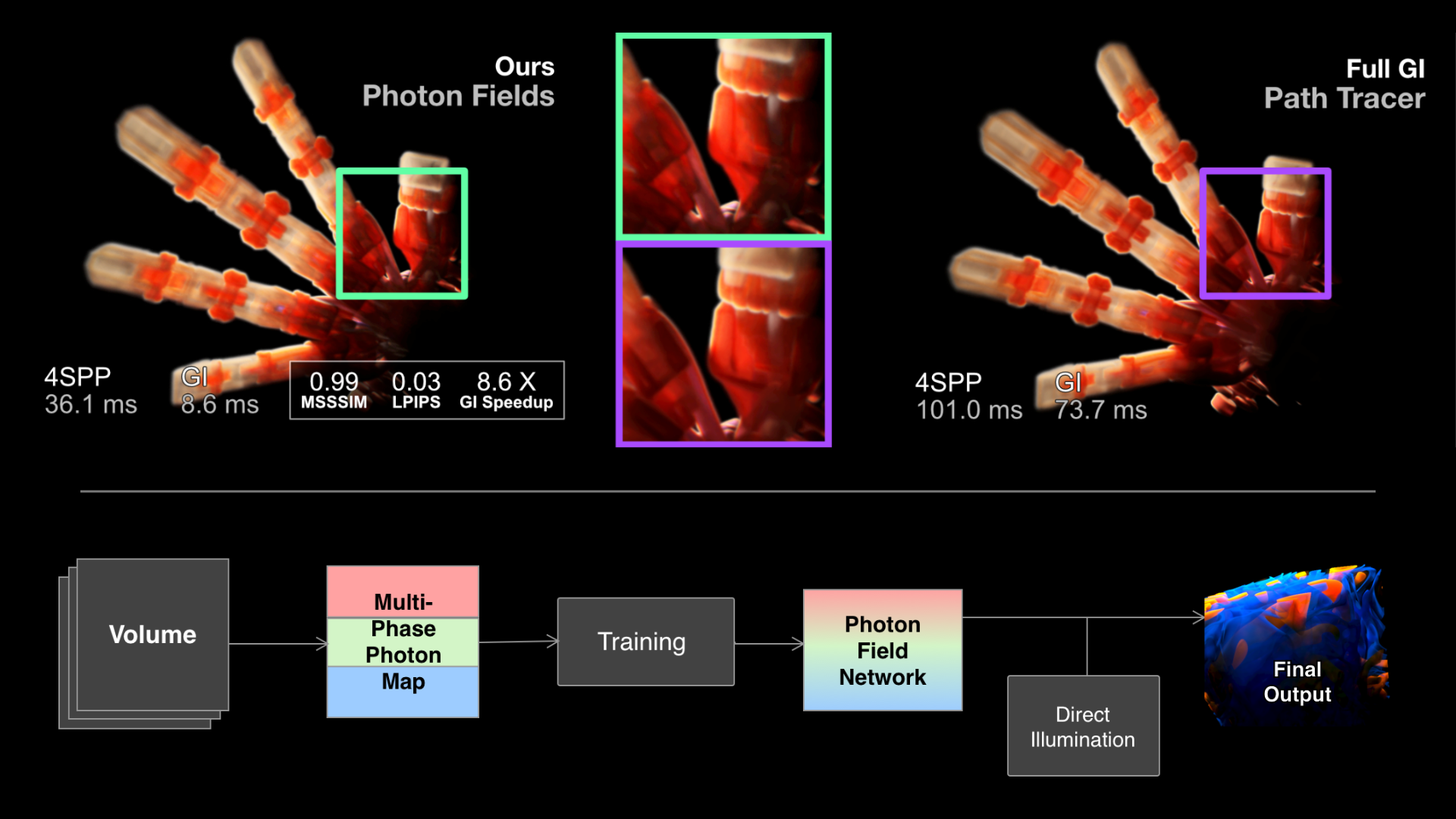

Photon Field Networks for Dynamic Real-Time Volumetric Global Illumination

David Bauer, Qi Wu, Kwan-Liu Ma

DOI: 10.1109/TVCG.2023.3327107

Room: 106

2023-10-26T00:33:00Z

Keywords

Volume data, volume rendering, volume visualization, deep learning, global illumination, neural rendering, path tracing

Abstract

Volume data is commonly found in many scientific disciplines, like medicine, physics, and biology. Experts rely on robust scientific visualization techniques to extract valuable insights from the data. Recent years have shown path tracing to be the preferred approach for volumetric rendering, given its high levels of realism. However, real-time volumetric path tracing often suffers from stochastic noise and long convergence times, limiting interactive exploration. In this paper, we present a novel method to enable real-time global illumination for volume data visualization. We develop Photon Field Networks - a phase-function-aware, multi-light neural representation of indirect volumetric global illumination. The fields are trained on multi-phase photon caches that we compute a priori. Training can be done within seconds, after which the fields can be used in various rendering tasks. To showcase their potential, we develop a custom neural path tracer, with which our photon fields achieve interactive framerates even on large datasets. We conduct in-depth evaluations of the method's performance, including visual quality, stochastic noise, inference and rendering speeds, and accuracy regarding illumination and phase function awareness. Results are compared to ray marching, path tracing and photon mapping. Our findings show that Photon Field Networks can faithfully represent indirect global illumination within the boundaries of the trained phase spectrum while exhibiting less stochastic noise and rendering at a significantly faster rate than traditional methods.