PSRFlow: Probabilistic Super Resolution with Flow-Based Models for Scientific Data

Jingyi Shen, Han-Wei Shen

DOI: 10.1109/TVCG.2023.3327171

Room: 106

2023-10-26T00:45:00Z

Keywords

Super resolution, latent space, normalizing flow, uncertainty visualization

Abstract

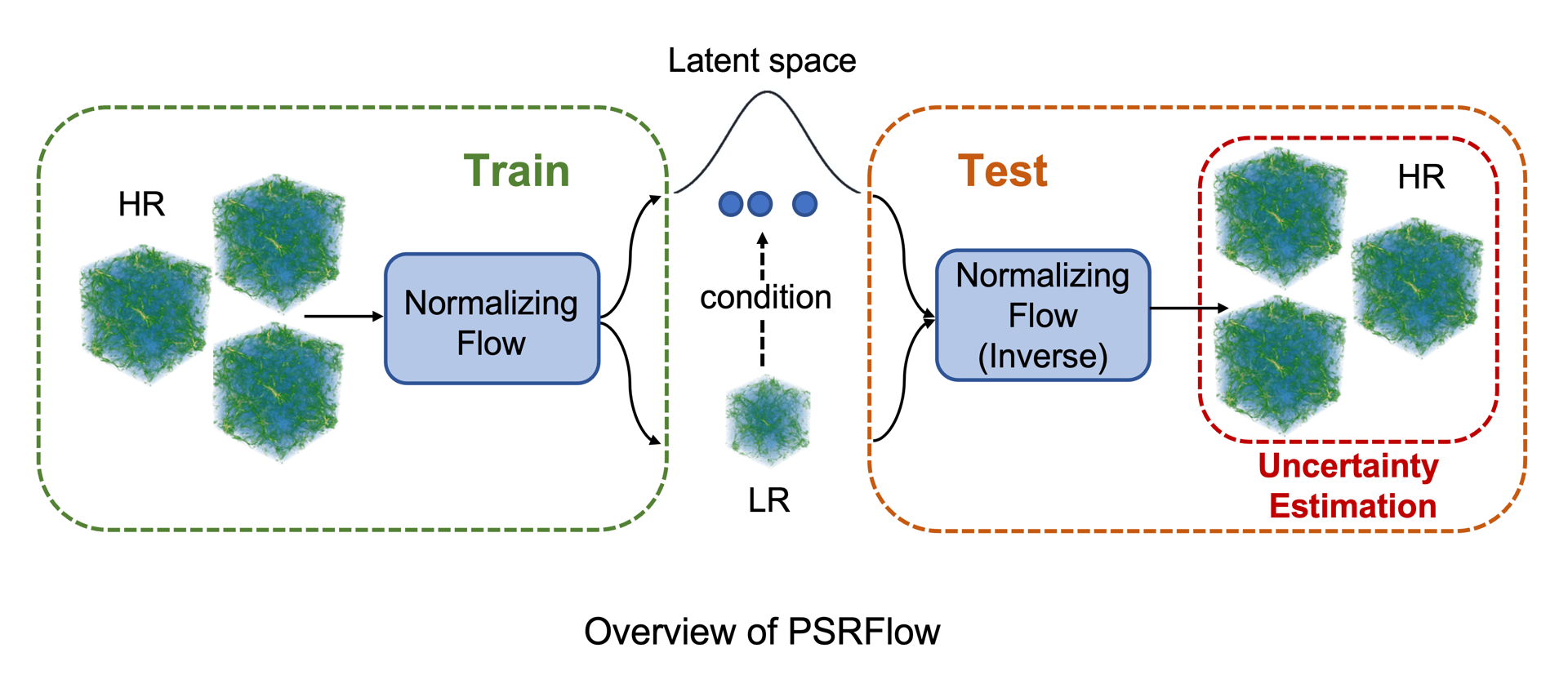

Although many deep-learning-based super-resolution approaches have been proposed in recent years, few can quantify the errors and uncertainties of their super-resolved results, because no ground truth is available at inference time. For scientific visualization applications, however, conveying the uncertainty of the results to scientists is crucial to avoid generating misleading or incorrect information. In this paper, we propose PSRFlow, a novel normalizing flow-based generative model for scientific data super-resolution that incorporates uncertainty quantification into the super-resolution process. PSRFlow learns the conditional distribution of the high-resolution data given its low-resolution counterpart. By sampling from a Gaussian latent space that captures the high-resolution information missing from the low-resolution input, one can generate different plausible super-resolution outputs. Because sampling in the Gaussian latent space is efficient, our model can quantify the uncertainty of the super-resolved results. During training, we augment the training data with samples at various scales so that the model adapts to data of different scales, enabling flexible super-resolution for a given input. Our results demonstrate superior performance and robust uncertainty quantification compared with existing methods such as interpolation and GAN-based super-resolution networks.
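The idea of sampling a Gaussian latent space through a conditional, invertible map to obtain both a point estimate and an uncertainty estimate can be illustrated with a toy example. This is a minimal sketch, not PSRFlow's architecture: it assumes a single hypothetical conditional affine layer, x = mu(c) + sigma(c) * z with z ~ N(0, I) and c the low-resolution input, in place of a trained deep flow. All names (`ConditionalAffineFlow`, `upsample_linear`, `local_scale`, `sample_uncertainty`) are illustrative, and `local_scale` is a hand-written stand-in for what a real model would learn. Because the map is invertible with a diagonal Jacobian, the exact conditional log-likelihood follows from the change-of-variables formula, and drawing many latent samples yields a per-point mean and standard deviation.

```python
import math
import random
import statistics


def upsample_linear(lr):
    """Linearly interpolate a 1-D low-resolution signal to 2x resolution.
    Hypothetical stand-in for a learned conditional mean mu(c)."""
    hr = []
    for i in range(len(lr)):
        nxt = lr[i + 1] if i + 1 < len(lr) else lr[i]
        hr.append(lr[i])
        hr.append(0.5 * (lr[i] + nxt))
    return hr


def local_scale(lr, base=0.05):
    """Hypothetical stand-in for a learned conditional scale sigma(c):
    more spread where the low-resolution signal changes quickly."""
    scales = []
    for i in range(len(lr)):
        nxt = lr[i + 1] if i + 1 < len(lr) else lr[i]
        s = base + abs(nxt - lr[i])
        scales.extend([s, s])  # one scale per high-resolution point
    return scales


class ConditionalAffineFlow:
    """Toy invertible map x = mu(c) + sigma(c) * z, conditioned on c = lr."""

    def __init__(self, lr):
        self.mu = upsample_linear(lr)
        self.sigma = local_scale(lr)

    def forward(self, z):
        # Latent code -> high-resolution sample.
        return [m + s * zi for m, s, zi in zip(self.mu, self.sigma, z)]

    def inverse(self, x):
        # High-resolution field -> latent code.
        return [(xi - m) / s for xi, m, s in zip(x, self.mu, self.sigma)]

    def log_prob(self, x):
        # Change of variables: log p(x | c) = log N(z; 0, I) + log|det dz/dx|,
        # where dz/dx is diagonal with entries 1 / sigma.
        z = self.inverse(x)
        log_base = sum(-0.5 * zi * zi - 0.5 * math.log(2 * math.pi) for zi in z)
        log_det = -sum(math.log(s) for s in self.sigma)
        return log_base + log_det


def sample_uncertainty(flow, n_samples=500, seed=0):
    """Draw latent samples, push them through the flow, and return the
    per-point mean (estimate) and standard deviation (uncertainty)."""
    rng = random.Random(seed)
    dim = len(flow.mu)
    samples = [
        flow.forward([rng.gauss(0.0, 1.0) for _ in range(dim)])
        for _ in range(n_samples)
    ]
    columns = list(zip(*samples))  # group samples by high-resolution point
    mean = [statistics.fmean(col) for col in columns]
    std = [statistics.stdev(col) for col in columns]
    return mean, std
```

For a step-like input such as `lr = [0.0, 0.0, 1.0, 1.0]`, `sample_uncertainty(ConditionalAffineFlow(lr))` returns a standard deviation that is largest at the high-resolution points covering the jump and small elsewhere, which is the kind of per-point uncertainty map a sampling-based approach can visualize. A real flow replaces the single affine layer with a stack of expressive invertible layers whose conditioning is learned from data.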