Guided Visual Analytics for Image Selection in Time and Space

Ignacio Pérez-Messina, Davide Ceneda, Silvia Miksch

DOI: 10.1109/TVCG.2023.3326572

Room: 103

2023-10-24T22:00:00Z

Keywords

Application Motivated Visualization, Geospatial Data, Mixed Initiative Human-Machine Analysis, Process/Workflow Design, Task Abstractions & Application Domains, Temporal Data

Abstract

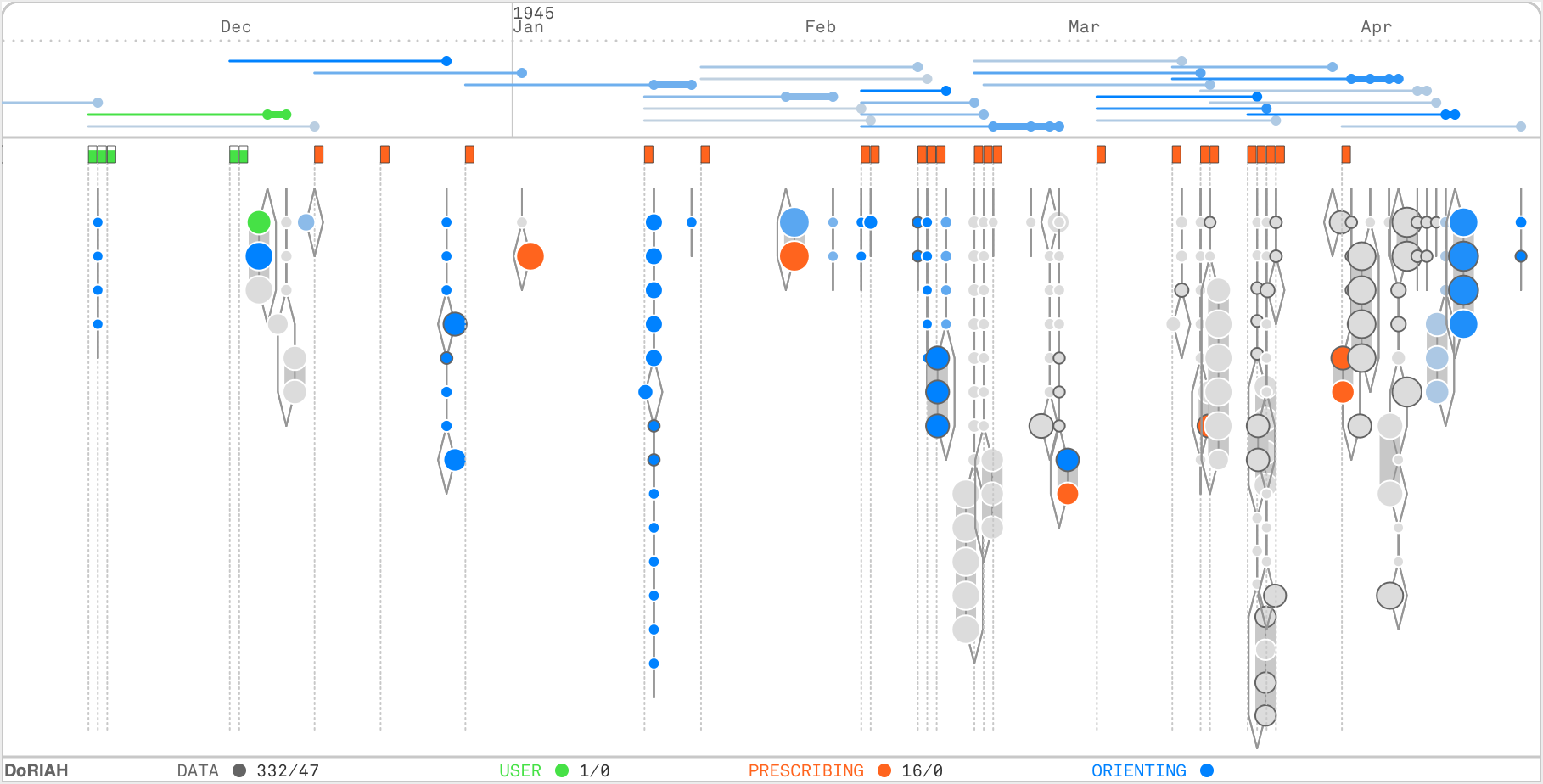

Unexploded Ordnance (UXO) detection, the identification of active remnant bombs buried underground using archival aerial images, involves a complex workflow with decisions at each stage. An essential phase of UXO detection is image selection, in which a small subset of images must be chosen from archives to reconstruct an area of interest (AOI) and identify craters. The selected image set must provide good spatial and temporal coverage of the AOI, particularly in the temporal vicinity of recorded aerial attacks, while using as few images as possible to conserve resources. This paper presents a guidance-enhanced visual analytics prototype for selecting images for UXO detection. In close collaboration with domain experts, our design process involved analyzing user tasks, eliciting expert knowledge, modeling quality metrics, and choosing appropriate guidance. We report on a user study with two real-world image selection scenarios, each performed with and without guidance. Our solution was well received and deemed highly usable. Through the lens of our task-based design and the quality measures we developed, we observed guidance-driven changes in user behavior and improved quality of analysis results. An expert evaluation of the study allowed us to further improve our guidance-enhanced prototype and to discuss new possibilities for user-adaptive guidance.
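
The selection constraints above (cover the AOI in space, favor images close in time to recorded attacks, use few images) can be illustrated as a weighted coverage problem. The following is a minimal sketch under stated assumptions, not the authors' quality metrics or guidance method: it assumes the AOI is discretized into grid cells, each image footprint is given as a set of those cells, and temporal relevance decays linearly to zero within a hypothetical `window_days` of the nearest attack date. The names `Image`, `spatial_coverage`, `temporal_score`, and `greedy_select`, as well as the `target` coverage threshold, are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List, Sequence, Set, Tuple

Cell = Tuple[int, int]  # hypothetical AOI grid cell (row, column)


@dataclass(frozen=True)
class Image:
    """Hypothetical archival image: name, capture date, covered AOI cells."""
    name: str
    taken: date
    cells: FrozenSet[Cell]


def spatial_coverage(selection: Sequence[Image], aoi_cells: FrozenSet[Cell]) -> float:
    """Fraction of AOI cells covered by at least one selected image."""
    covered = set().union(*(img.cells for img in selection))
    return len(covered & aoi_cells) / len(aoi_cells)


def temporal_score(img: Image, attack_dates: Sequence[date], window_days: int = 30) -> float:
    """1.0 on an attack date, decaying linearly to 0.0 at window_days away (assumption)."""
    gap = min(abs((img.taken - d).days) for d in attack_dates)
    return max(0.0, 1.0 - gap / window_days)


def greedy_select(images: Sequence[Image], aoi_cells: FrozenSet[Cell],
                  attack_dates: Sequence[date], target: float = 0.95,
                  window_days: int = 30) -> List[Image]:
    """Repeatedly pick the image whose newly covered AOI cells, weighted by
    temporal relevance, are largest, until the coverage target is reached."""
    selected: List[Image] = []
    covered: Set[Cell] = set()
    remaining = list(images)

    def gain(img: Image) -> float:
        new_cells = len((img.cells & aoi_cells) - covered)
        return new_cells * temporal_score(img, attack_dates, window_days)

    while remaining and len(covered) / len(aoi_cells) < target:
        best = max(remaining, key=gain)
        if gain(best) == 0:
            break  # no remaining image adds relevant coverage
        selected.append(best)
        covered |= best.cells & aoi_cells
        remaining.remove(best)
    return selected
```

Greedy selection is a common heuristic for set-cover-style problems; it does not guarantee the smallest possible image set. Because the gain is multiplied by the temporal score, images taken outside the assumed window are never selected in this sketch, which is one possible way to encode "particularly in the temporal vicinity of recorded aerial attacks".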