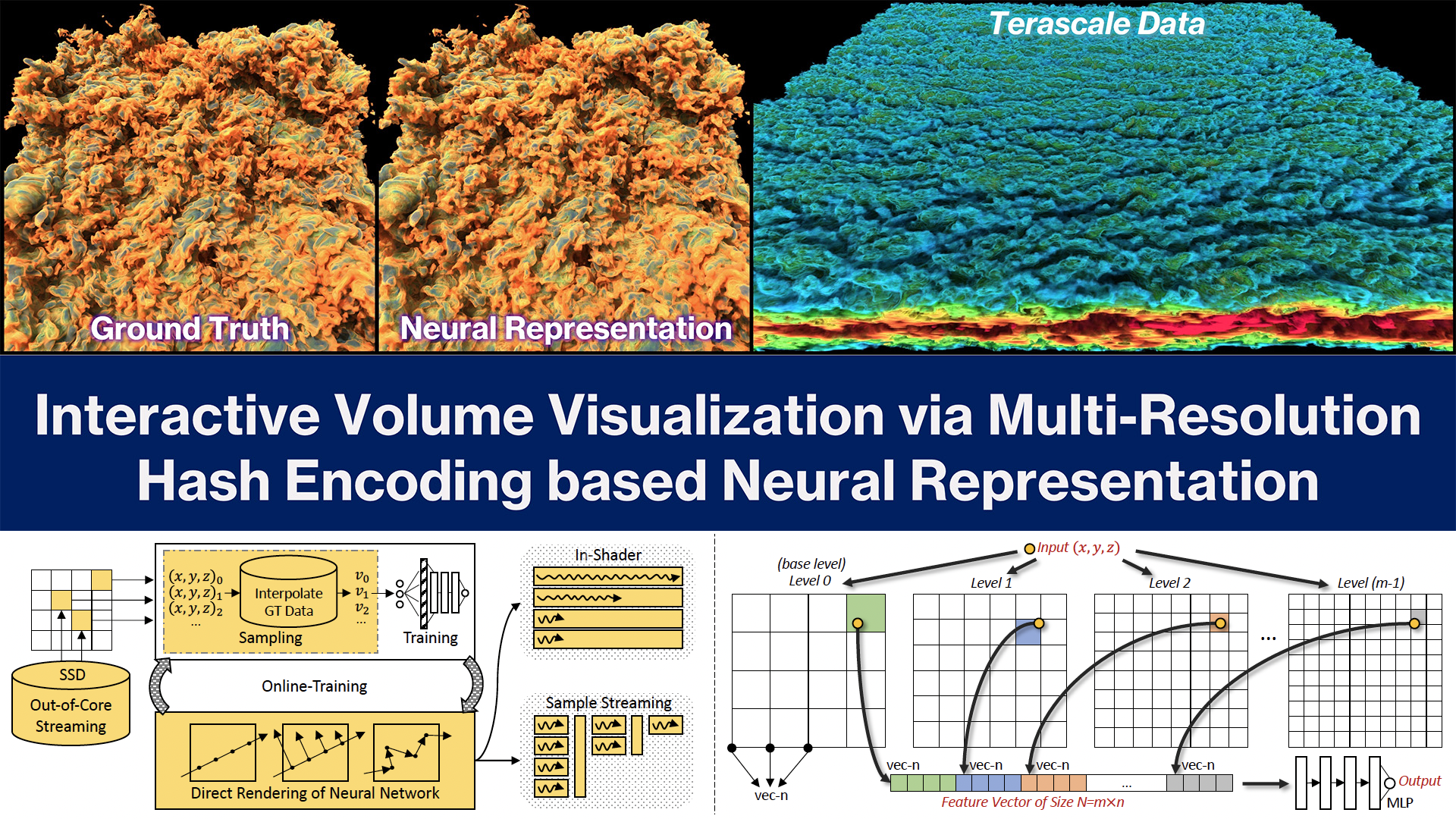

Interactive Volume Visualization via Multi-Resolution Hash Encoding based Neural Representation

Qi Wu, David Bauer, Michael J. Doyle, Kwan-Liu Ma

DOI: 10.1109/TVCG.2023.3293121

Room: 106

2023-10-26T00:09:00Z

Keywords

Implicit neural representation; path tracing; ray marching; volume visualization

Abstract

Neural networks have shown great potential in compressing volume data for visualization. However, due to the high cost of training and inference, such volumetric neural representations have thus far only been applied to offline data processing and non-interactive rendering. In this paper, we demonstrate that by simultaneously leveraging modern GPU tensor cores, a native CUDA neural network framework, and a well-designed rendering algorithm with macro-cell acceleration, we can interactively ray trace volumetric neural representations (10-60 fps). Our neural representations are also high-fidelity (PSNR > 30 dB) and compact (10-1000x smaller). Additionally, we show that it is possible to fit the entire training step inside a rendering loop and skip the pre-training process completely. To support extreme-scale volume data, we also develop an efficient out-of-core training strategy, which allows our volumetric neural representation training to potentially scale up to terascale using only an NVIDIA RTX 3090 workstation.
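The fidelity and compactness figures quoted in the abstract can be made concrete with a small sketch. The code below is an illustration only, not the authors' evaluation code: it assumes PSNR is computed over sample values normalized to a known range (`data_range`, a hypothetical parameter), and it assumes the compression ratio is simply raw volume size divided by model size. The function names and the example sizes are hypothetical.

```python
import math


def psnr(reference, reconstruction, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length sequences.

    Assumption: values are normalized so that `data_range` is the peak range.
    """
    if len(reference) != len(reconstruction):
        raise ValueError("inputs must have the same length")
    if len(reference) == 0:
        raise ValueError("inputs must be non-empty")
    # Mean squared error over all samples.
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(data_range ** 2 / mse)


def compression_ratio(volume_bytes, model_bytes):
    """How many times smaller the model is than the raw volume."""
    if model_bytes <= 0:
        raise ValueError("model_bytes must be positive")
    return volume_bytes / model_bytes


# Hypothetical example: a 1024^3 float32 volume versus a model holding
# 16 Mi half-precision parameters.
volume_bytes = 1024 ** 3 * 4
model_bytes = 16 * 1024 ** 2 * 2
example_ratio = compression_ratio(volume_bytes, model_bytes)
```

Under these assumptions, a uniform reconstruction error of 0.01 on data normalized to [0, 1] corresponds to roughly 40 dB, which would clear the 30 dB threshold mentioned in the abstract, and the hypothetical sizes above give a ratio within the stated 10-1000x range.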