When, Where and How does it fail? A Spatial-temporal Visual Analytics Approach for Interpretable Object Detection in Autonomous Driving

Junhong Wang, Yun Li, Zhaoyu Zhou, Chengshun Wang, Yijie Hou, Li Zhang, Xiangyang Xue, Michael Kamp, Xiaolong (Luke) Zhang, Siming Chen

DOI: 10.1109/TVCG.2022.3201101

Room: 105

2023-10-26T22:36:00ZGMT-0600Change your timezone on the schedule page

2023-10-26T22:36:00Z

Fast forward

Full Video

Keywords

Autonomous driving;spatial-temporal visual analytics;interpretability

Abstract

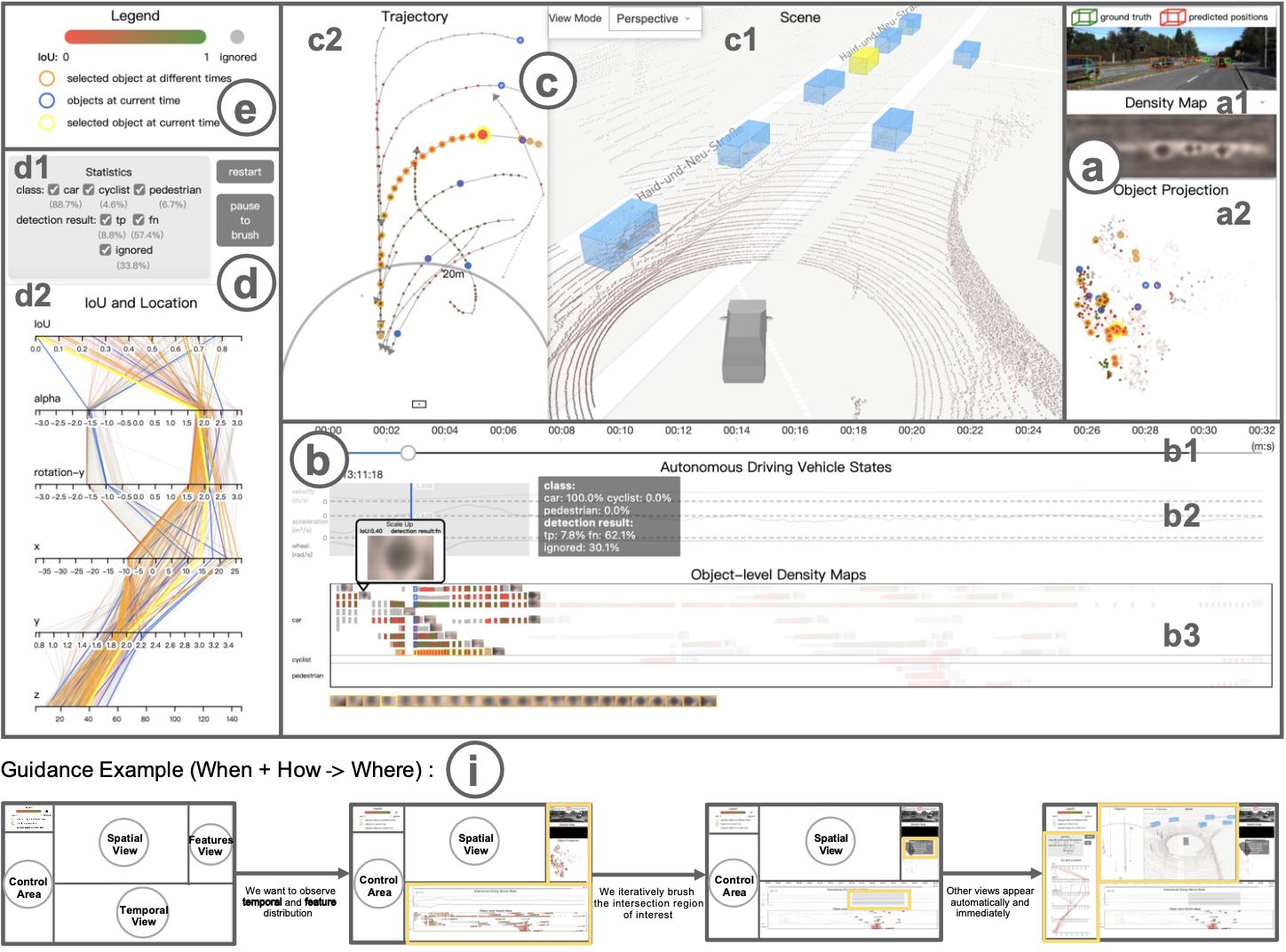

Arguably the most representative application of artificial intelligence, autonomous driving systems usually rely on computer vision techniques to detect the situations of the external environment. Object detection underpins the ability of scene understanding in such systems. However, existing object detection algorithms often behave as a black box, so when a model fails, no information is available on When, Where and How the failure happened. In this paper, we propose a visual analytics approach to help model developers interpret the model failures. The system includes the micro- and macro-interpreting modules to address the interpretability problem of object detection in autonomous driving. The micro-interpreting module extracts and visualizes the features of a convolutional neural network (CNN) algorithm with density maps, while the macro-interpreting module provides spatial-temporal information of an autonomous driving vehicle and its environment. With the situation awareness of the spatial, temporal and neural network information, our system facilitates the understanding of the results of object detection algorithms, and helps the model developers better understand, tune and develop the models. We use real-world autonomous driving data to perform case studies by involving domain experts in computer vision and autonomous driving to evaluate our system. The results from our interviews with them show the effectiveness of our approach.