Visual Exploration of Machine Learning Model Behavior with Hierarchical Surrogate Rule Sets

Jun Yuan, Brian Barr, Kyle Overton, Enrico Bertini

DOI: 10.1109/TVCG.2022.3219232

Room: 109

2023-10-25T05:09:00Z

Keywords

visualization; rule set; surrogate model; model understanding

Abstract

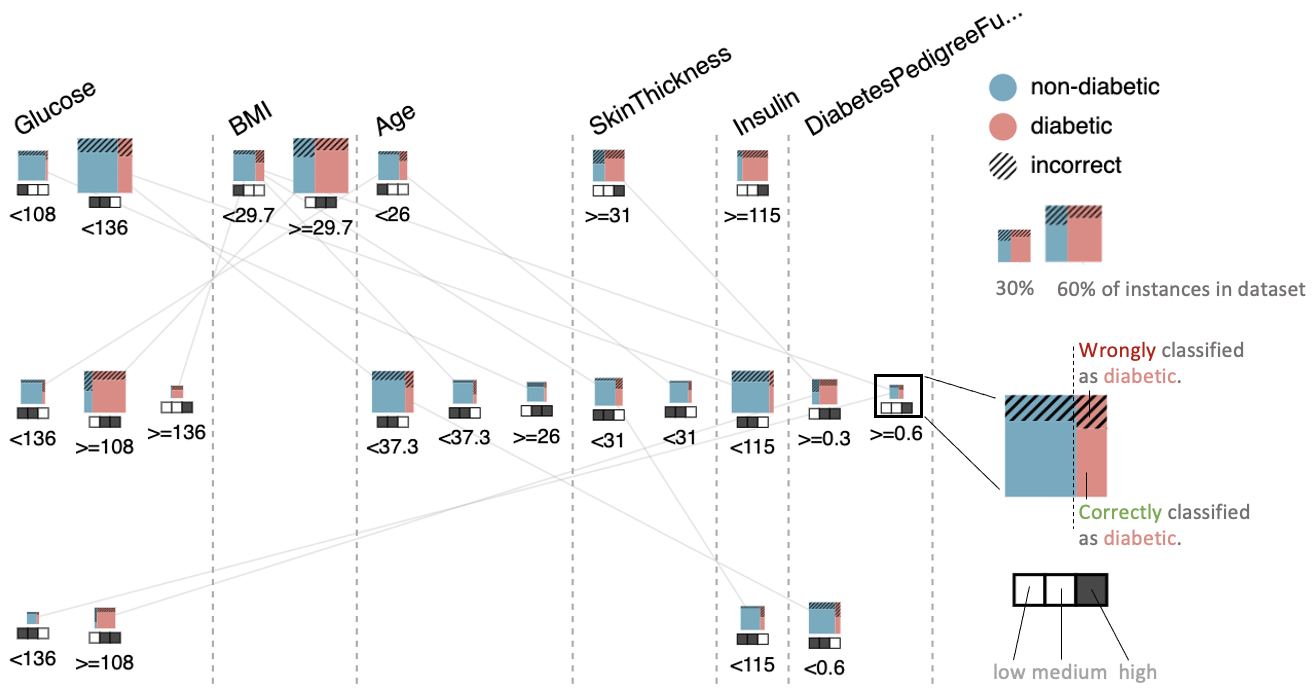

One of the potential solutions for model interpretation is to train a surrogate model: a more transparent model that approximates the behavior of the model to be explained. Typically, classification rules or decision trees are used due to their logic-based expressions. However, decision trees can grow too deep, and rule sets can become too large to approximate a complex model. Unlike paths on a decision tree that must share ancestor nodes (conditions), rules are more flexible. However, the unstructured visual representation of rules makes it hard to make inferences across rules. In this paper, we focus on tabular data and present novel algorithmic and interactive solutions to address these issues. First, we present Hierarchical Surrogate Rules (HSR), an algorithm that generates hierarchical rules based on user-defined parameters. We also contribute SURE, a visual analytics (VA) system that integrates HSR and an interactive surrogate rule visualization, the Feature-Aligned Tree, which depicts rules as trees while aligning features for easier comparison. We evaluate the algorithm in terms of parameter sensitivity, time performance, and comparison with surrogate decision trees and find that it scales reasonably well and overcomes the shortcomings of surrogate decision trees. We evaluate the visualization and the system through a usability study and an observational study with domain experts. Our investigation shows that the participants can use feature-aligned trees to perform non-trivial tasks with very high accuracy. We also discuss many interesting findings, including a rule analysis task characterization, that can be used for visualization design and future research.
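As a rough illustration of the surrogate idea described above, the following minimal Python sketch measures how closely a small rule set agrees with a model to be explained (often called fidelity). It is not the HSR algorithm from the paper. The `black_box` function, the feature names `income` and `debt`, the first-match rule semantics, and the sample rows are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

INF = float("inf")


@dataclass(frozen=True)
class Condition:
    feature: str
    low: float   # inclusive lower bound
    high: float  # exclusive upper bound

    def holds(self, row):
        return self.low <= row[self.feature] < self.high


@dataclass(frozen=True)
class Rule:
    conditions: tuple
    label: int

    def covers(self, row):
        # A rule covers a row only if every one of its conditions holds.
        return all(c.holds(row) for c in self.conditions)


def black_box(row):
    # Hypothetical stand-in for the complex model being explained.
    return int(row["income"] >= 50 and row["debt"] < 20)


def surrogate_predict(rules, row, default=0):
    # Assumption: the first matching rule wins; otherwise use the default label.
    for rule in rules:
        if rule.covers(row):
            return rule.label
    return default


def fidelity(rules, rows):
    # Fraction of rows on which the rule set agrees with the black box.
    agree = sum(surrogate_predict(rules, r) == black_box(r) for r in rows)
    return agree / len(rows)


rows = [{"income": i, "debt": d} for i in (30, 50, 80) for d in (10, 20, 40)]

# A coarse rule with one condition, then a refinement that adds a second one.
coarse = [Rule((Condition("income", 50, INF),), 1)]
refined = [Rule((Condition("income", 50, INF), Condition("debt", -INF, 20)), 1)]

coarse_fidelity = fidelity(coarse, rows)
refined_fidelity = fidelity(refined, rows)
print(coarse_fidelity, refined_fidelity)
```

On this toy data the refined rule agrees with the stand-in model on more rows than the coarse one, which hints at why adding conditions step by step can trade rule simplicity for fidelity.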