Beyond Generating Code: Evaluating GPT on a Data Visualization Course

Zhutian Chen, Chenyang Zhang, Qianwen Wang, Jakob Troidl, Simon Alexander Warchol, Johanna Beyer, Nils Gehlenborg, Hanspeter Pfister

Room: 109

2023-10-22T22:00:00Z

Abstract

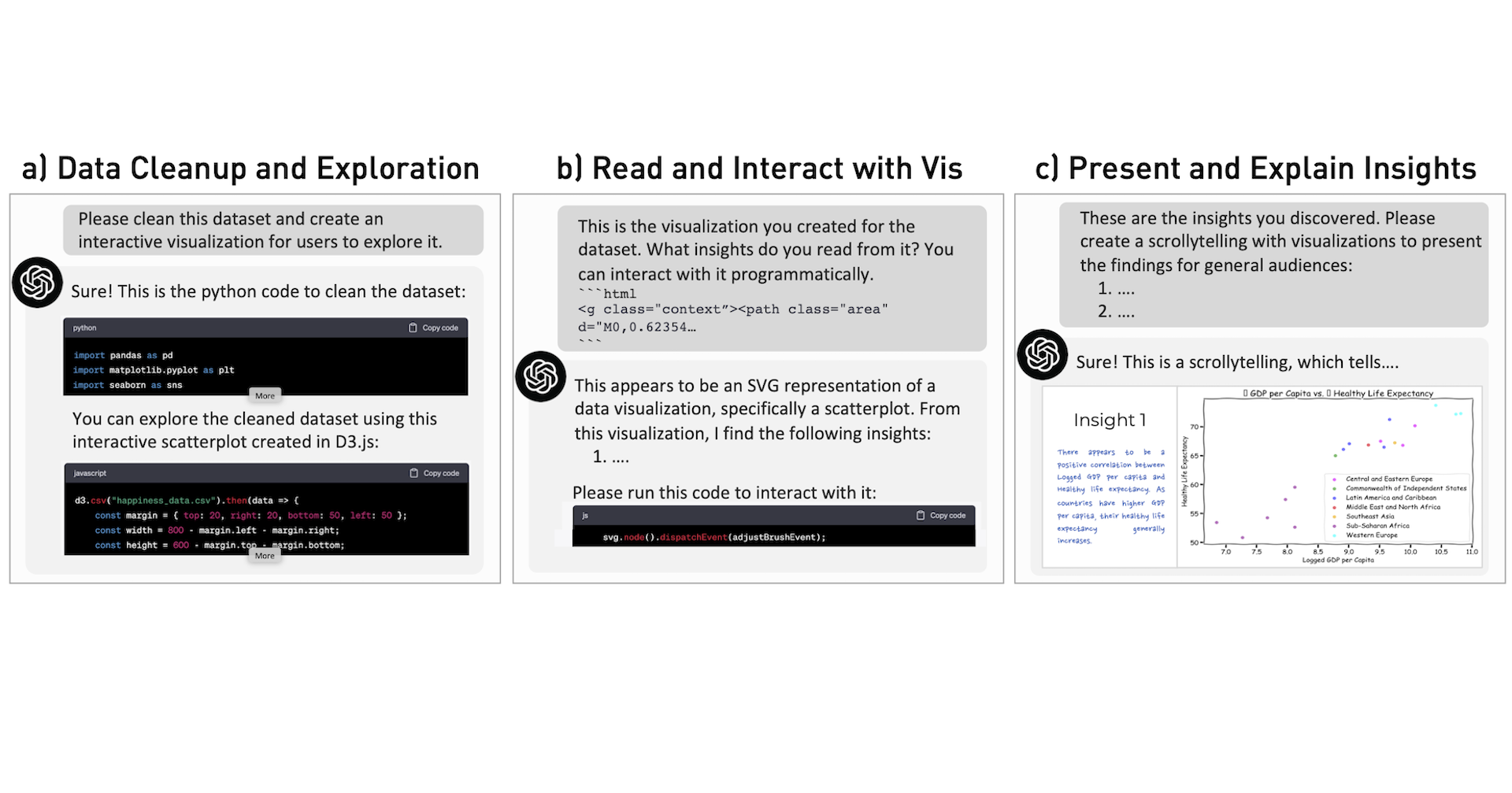

This paper presents an empirical evaluation of the performance of the Generative Pre-trained Transformer (GPT) model in Harvard’s CS171 data visualization course. While previous studies have focused on GPT’s ability to generate code for visualizations, this study goes beyond code generation to evaluate GPT’s abilities in various visualization tasks, such as data interpretation, visualization design, visual data exploration, and insight communication. The evaluation used GPT-3.5 and GPT-4 through OpenAI’s APIs to complete CS171 assignments, and included a quantitative assessment based on the established course rubrics, a qualitative analysis informed by the feedback of three experienced graders, and an exploratory study of GPT’s capabilities in completing broader visualization tasks. Findings show that GPT-4 scored 80% on quizzes and homework, and Teaching Fellows could distinguish between GPT- and human-generated homework with 70% accuracy. The study also demonstrates GPT’s potential in completing various visualization tasks, such as data cleanup, interaction with visualizations, and insight communication. The paper concludes by discussing the strengths and limitations of GPT in data visualization, potential avenues for incorporating GPT in broader visualization tasks, and the need to redesign visualization education.