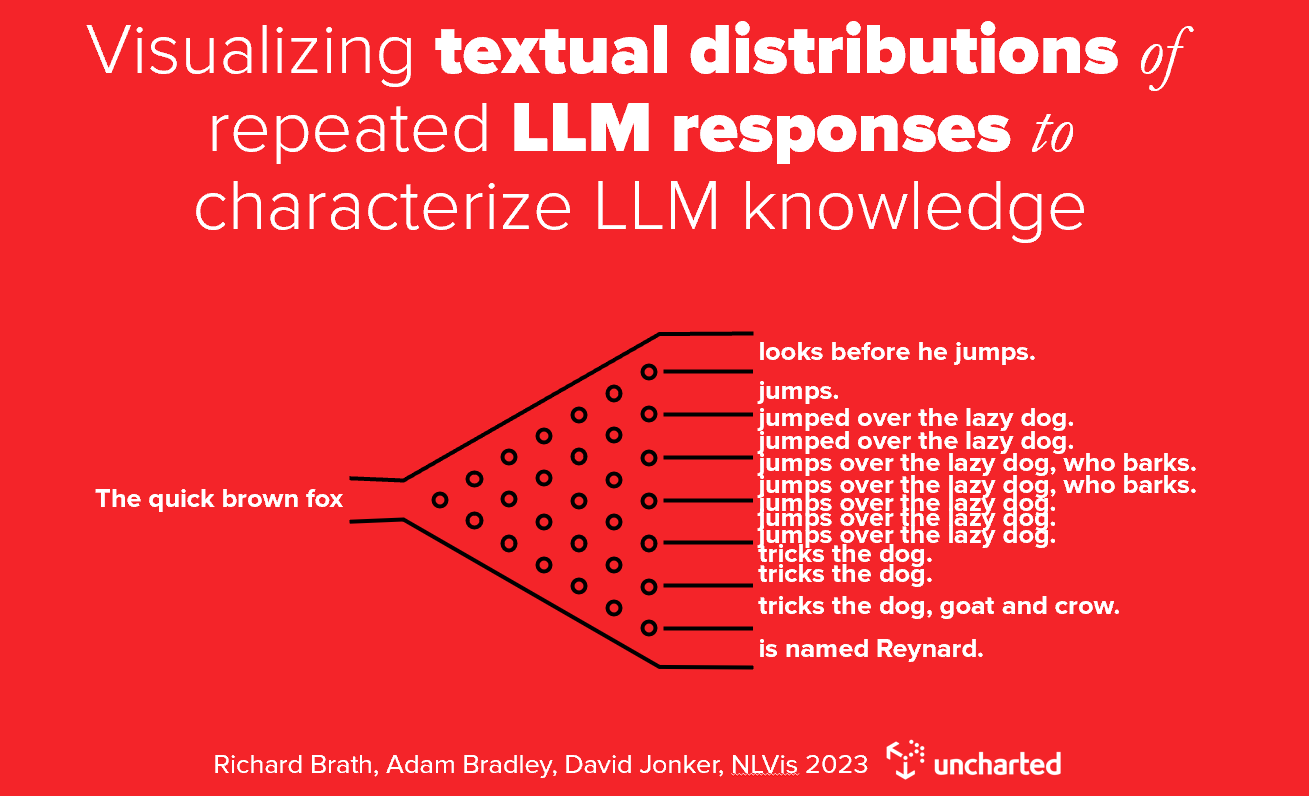

Visualizing textual distributions of repeated LLM responses to characterize LLM knowledge

Richard Brath, Adam James Bradley, David Jonker

Room: 110

2023-10-22T03:00:00Z

Abstract

The breadth and depth of knowledge learned by Large Language Models (LLMs) can be assessed through repeated prompting and visual analysis of commonality across the responses. We present three visualizations: aligned responses that show how closely the LLM completes prompt text verbatim, mind maps of knowledge across several areas within general topics, and an association graph of topics generated directly by recursively prompting the LLM.
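
As a rough illustration of what "commonality across the responses" could mean in practice, the sketch below computes, for each word, the share of repeated responses that contain it. This is not the authors' method: the function name `token_commonality`, the whitespace tokenization, and the sample responses are all assumptions made for illustration, and no LLM is called.

```python
from collections import Counter


def token_commonality(responses):
    """Return, for each lowercase word, the fraction of responses containing it.

    Hypothetical helper: tokenization is a plain whitespace split, which is
    an assumption and far cruder than the alignment the abstract describes.
    """
    if not responses:
        return {}
    counts = Counter()
    for text in responses:
        # Count each word once per response, so the value is a share of responses.
        counts.update(set(text.lower().split()))
    return {word: n / len(responses) for word, n in counts.items()}


# Made-up strings standing in for repeated completions of a single prompt.
sample_responses = [
    "the quick brown fox",
    "the quick red fox",
    "the slow brown dog",
]
shares = token_commonality(sample_responses)
```

Words with a share near 1 appear in almost every response, while low shares mark content that varies between runs; a visualization could map this share to font weight or color.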