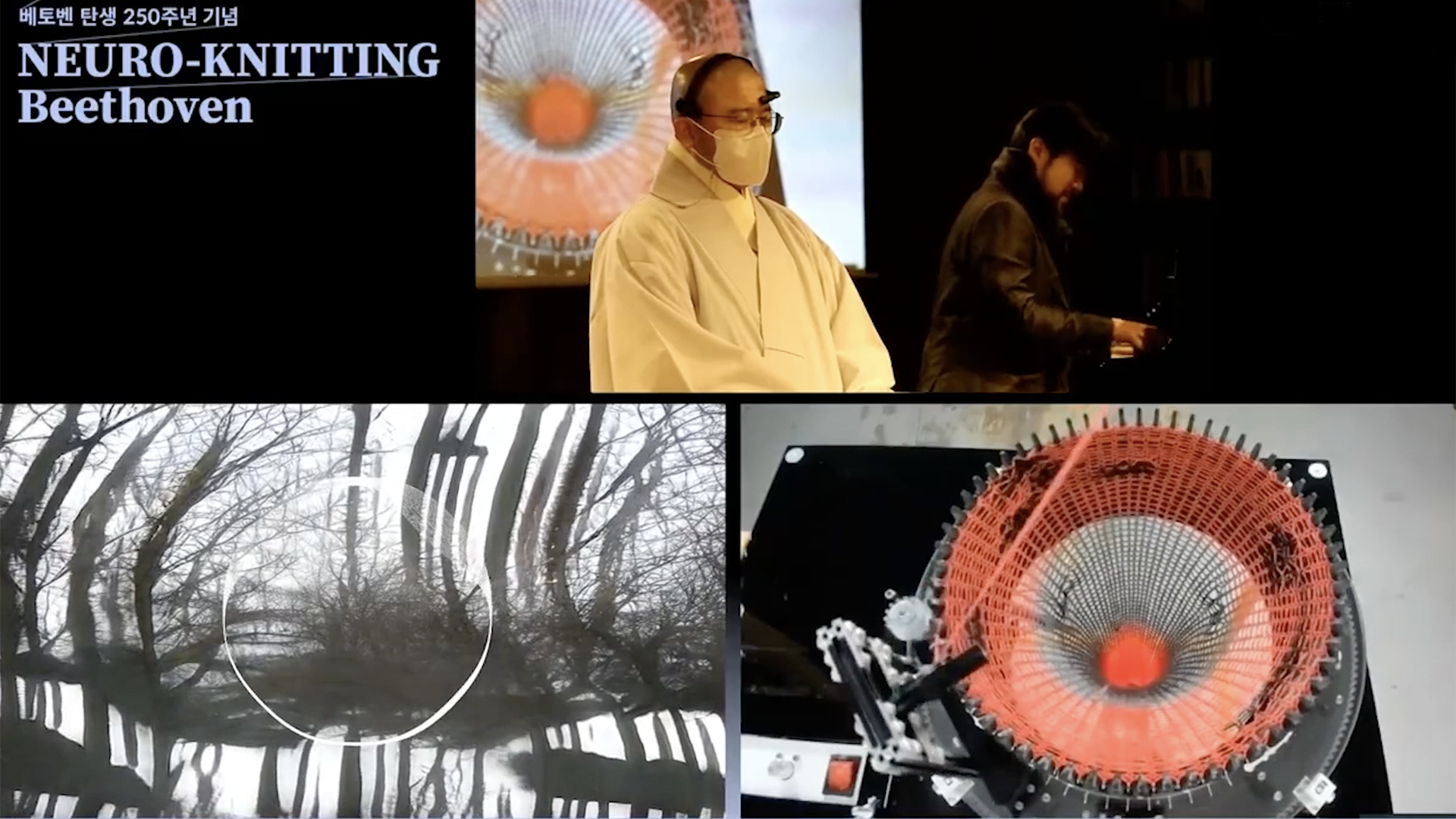

NeuroKnitting Beethoven

Varvara Guljajeva, Mar Canet Sola

Presentation time: 2022-10-18T23:40:00Z

The live footage of the talk, including the Q&A, can be viewed on the session page: VISAP Opening Reception (6:00 pm-8:00 pm), Art Exhibit, Location: Automobile Alley.

Abstract

NeuroKnitting Beethoven was developed to celebrate the 250th anniversary of Ludwig van Beethoven's birth and to revisit the composer's classical compositions from an interdisciplinary viewpoint. The audience could experience not only an artistic interpretation of the composition but also the musician's emotional state, which drove, in real time, the movement of a circular knitting machine installation, the visuals, and the pattern plotted onto the produced garment. The pianist's affective response to the music (in the Seoul performance, a monk's) was captured every second and recorded in the knitted textile pattern, which was sprayed onto the yarn before it was knitted. A high attention level produced a dense pattern, and the knitting machine's speed followed the meditation level. All of these processes ran simultaneously and in real time. In addition, sound-responsive AI-generated visuals were displayed alongside the brain-data visualizations.
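The abstract describes two mappings: attention level to pattern density, and meditation level to knitting-machine speed. The sketch below illustrates such mappings in a minimal way and is not the artists' implementation. It assumes that attention and meditation arrive as one reading per second on a 0-100 scale. The speed range (`SPEED_MIN`, `SPEED_MAX`), the row width (`PATTERN_WIDTH`), the linear scaling, and all names (`Reading`, `machine_speed`, `pattern_row`, `process`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple

# All ranges below are assumptions; the abstract gives no scales or units.
LEVEL_MIN, LEVEL_MAX = 0.0, 100.0  # assumed 0-100 scale for attention/meditation
SPEED_MIN, SPEED_MAX = 10.0, 60.0  # hypothetical machine speed range (rpm)
PATTERN_WIDTH = 20                 # hypothetical stitches per pattern row


@dataclass
class Reading:
    """One (assumed) per-second sample of the performer's brain-data metrics."""
    attention: float
    meditation: float


def normalize(level: float) -> float:
    """Clamp a level to the assumed range and scale it to [0, 1]."""
    clamped = min(max(level, LEVEL_MIN), LEVEL_MAX)
    return (clamped - LEVEL_MIN) / (LEVEL_MAX - LEVEL_MIN)


def machine_speed(meditation: float) -> float:
    """Machine speed follows the meditation level (linear map, an assumption)."""
    return SPEED_MIN + normalize(meditation) * (SPEED_MAX - SPEED_MIN)


def pattern_row(attention: float) -> str:
    """Higher attention gives a denser row: more '#' stitches out of PATTERN_WIDTH."""
    marked = round(normalize(attention) * PATTERN_WIDTH)
    # Spread the marked stitches evenly; the per-position increments sum to `marked`.
    return "".join(
        "#" if (i + 1) * marked // PATTERN_WIDTH > i * marked // PATTERN_WIDTH else "."
        for i in range(PATTERN_WIDTH)
    )


def process(readings: Iterable[Reading]) -> Iterator[Tuple[float, str]]:
    """Turn each reading into (machine speed, pattern row).

    In a live setting the readings would arrive once per second; here they are
    simply an iterable.
    """
    for r in readings:
        yield machine_speed(r.meditation), pattern_row(r.attention)


if __name__ == "__main__":
    for speed, row in process([Reading(80, 20), Reading(30, 90)]):
        print(f"{speed:5.1f} rpm  {row}")
```

Keeping each mapping as a separate pure function mirrors the abstract's description of two independent channels (density and speed) that are driven by the same per-second stream.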