Multi-View Design Patterns and Responsive Visualization for Genomics Data

Sehi L'Yi, Nils Gehlenborg

2022-10-19T20:45:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, DNA/Genome and Molecular Data/Vis.

Abstract

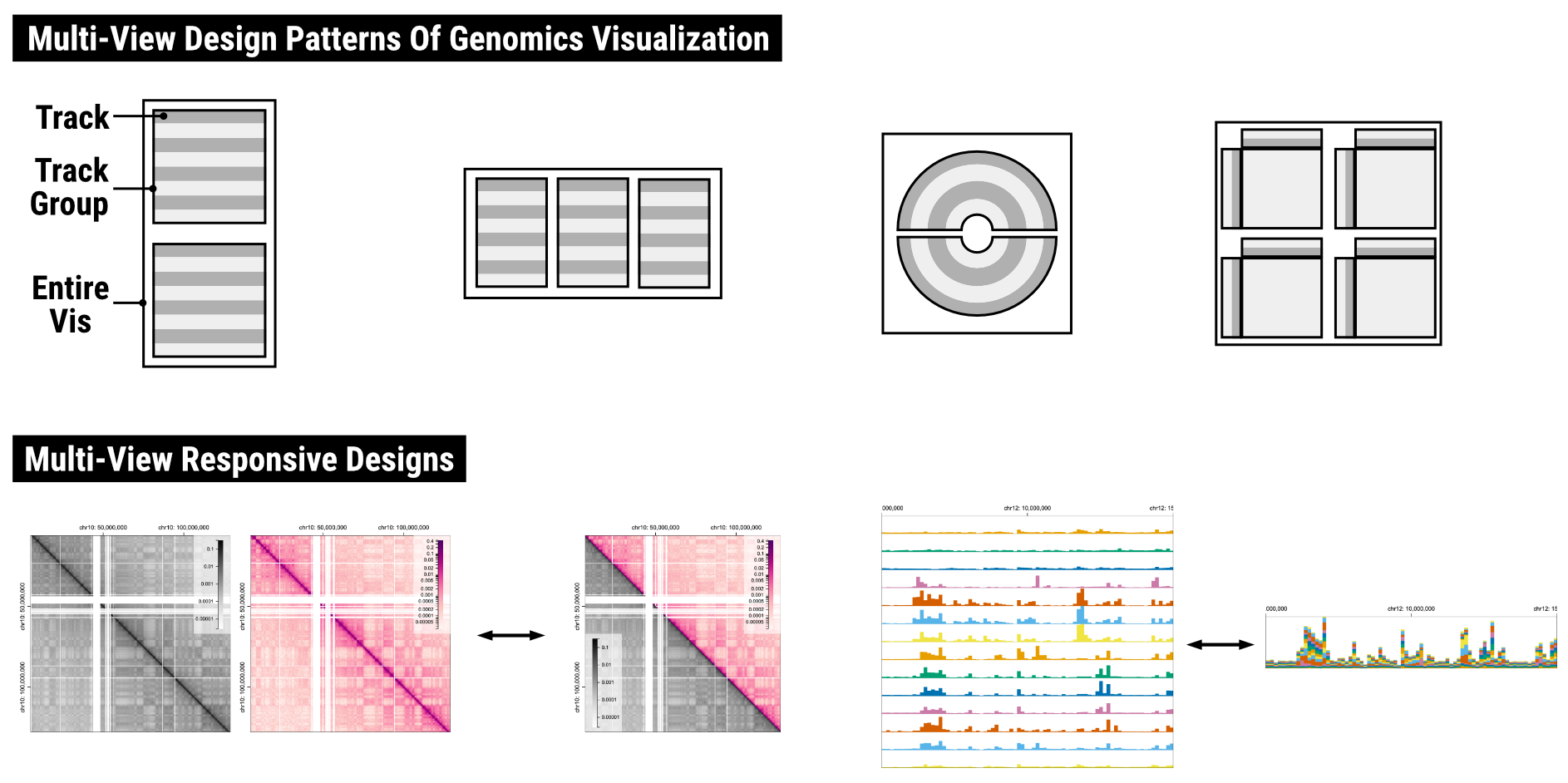

A series of recent studies has focused on designing cross-resolution and cross-device visualizations, i.e., responsive visualization, a concept adopted from responsive web design. However, these studies mainly focused on visualizations with a single view or a small number of views, and there are still unresolved questions about how to design responsive multi-view visualizations. In this paper, we present a reusable and generalizable framework for designing responsive multi-view visualizations focused on genomics data. To gain a better understanding of existing design challenges, we review web-based genomics visualization tools in the wild. By characterizing tools based on a taxonomy of responsive designs, we find that responsiveness is rarely supported in existing tools. To distill insights from the survey results in a systematic way, we classify typical view composition patterns, such as “vertically long,” “horizontally wide,” “circular,” and “cross-shaped” compositions. We then identify the usability issues that these composition patterns cause at different resolutions, and discuss approaches to address the issues and to make genomics visualizations responsive. By extending the Gosling visualization grammar to support responsive constructs, we show how these approaches can be supported. A valuable follow-up study would take different input modalities into account, such as mouse and touch interactions, which were not considered in our study.