Towards Natural Language-Based Visualization Authoring

Yun Wang, Zhitao Hou, Jiaqi Wang, Tongshuang Wu, Leixian Shen, He Huang, Haidong Zhang, Dongmei Zhang

Presentation: 2022-10-21T14:48:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, Natural Language Interaction.


Abstract

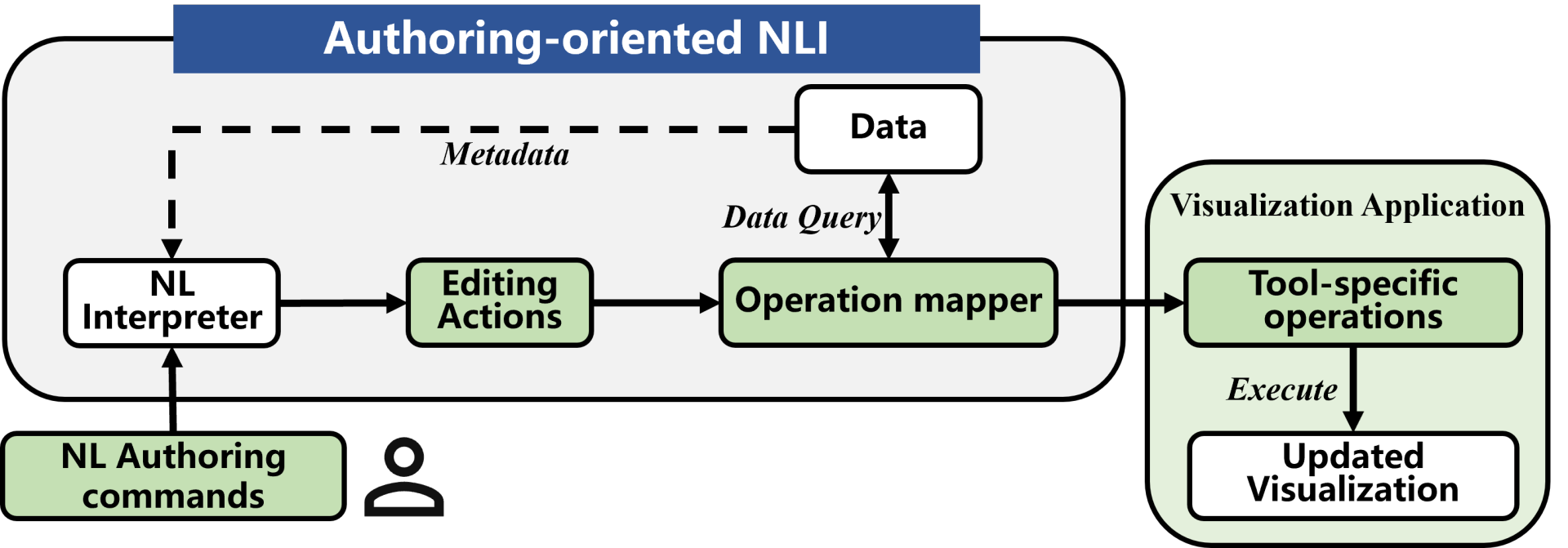

A key challenge in visualization authoring is becoming familiar with the complex user interfaces of authoring tools. Natural language interfaces (NLIs) offer promising benefits due to their learnability and usability. However, supporting NLIs in authoring tools requires expertise in natural language processing, and existing NLIs are mostly designed for visual analytics workflows. In this paper, we propose an authoring-oriented NLI pipeline by introducing a structured representation of users’ visualization editing intents, called editing actions, based on a formative study and an extensive survey of visualization construction tools. The editing actions are executable and thus serve as an intermediate layer that decouples natural language interpretation from visualization applications. We implement a deep learning-based NL interpreter to translate NL utterances into editing actions. The interpreter is reusable and extensible across authoring tools; an authoring tool only needs to map the editing actions into tool-specific operations. To illustrate the use of the NL interpreter, we implement an Excel chart editor and a proof-of-concept authoring tool, VisTalk. We conduct a user study with VisTalk to understand the usage patterns of NL-based authoring systems. Finally, we discuss observations on how users author charts with natural language, as well as implications for future research.
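The decoupling described in the abstract can be pictured with a minimal Python sketch. Everything in it is an assumption made for illustration: the `EditingAction` fields, the operation names, and both adapters are hypothetical and are not the paper's actual schema or the VisTalk or Excel implementations. A toy keyword matcher stands in for the deep learning-based interpreter, simply to show that the interpreter emits tool-independent actions and each tool maps them to its own operations.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Protocol


@dataclass
class EditingAction:
    """Hypothetical tool-independent editing intent (not the paper's schema)."""
    operation: str                      # e.g. "set_color"
    target: str                         # e.g. "bars"
    parameters: Dict[str, Any] = field(default_factory=dict)


def interpret(utterance: str) -> List[EditingAction]:
    """Toy keyword matcher standing in for the deep learning-based interpreter."""
    words = set(utterance.lower().replace(",", " ").split())
    actions: List[EditingAction] = []
    for color in ("red", "green", "blue"):
        if color in words and ("bar" in words or "bars" in words):
            actions.append(EditingAction("set_color", "bars", {"color": color}))
    if "title" in words and " to " in utterance:
        text = utterance.split(" to ", 1)[1].strip()
        actions.append(EditingAction("set_title", "chart", {"text": text}))
    return actions


class ToolAdapter(Protocol):
    """What an authoring tool must provide: a mapping from actions to its own operations."""
    def apply(self, action: EditingAction) -> str: ...


class SpreadsheetChartAdapter:
    """Hypothetical adapter that renders actions as pseudo-commands for a spreadsheet chart."""
    def apply(self, action: EditingAction) -> str:
        if action.operation == "set_color":
            return f"series.fill = {action.parameters['color']}"
        if action.operation == "set_title":
            return f"chart.title = {action.parameters['text']!r}"
        raise ValueError(f"unsupported operation: {action.operation}")


class SvgChartAdapter:
    """Hypothetical adapter that renders the same actions as SVG fragments."""
    def apply(self, action: EditingAction) -> str:
        if action.operation == "set_color":
            return f'<rect fill="{action.parameters["color"]}"/>'
        if action.operation == "set_title":
            return f"<title>{action.parameters['text']}</title>"
        raise ValueError(f"unsupported operation: {action.operation}")


def run(utterance: str, adapter: ToolAdapter) -> List[str]:
    """Interpret once, then let the chosen tool map each action to its own operation."""
    return [adapter.apply(action) for action in interpret(utterance)]


if __name__ == "__main__":
    for adapter in (SpreadsheetChartAdapter(), SvgChartAdapter()):
        print(run("Make the bars red", adapter))
```

In this sketch the same `interpret` function serves both adapters unchanged, which is the reuse property the abstract claims for the interpreter; supporting a new tool would mean writing only another adapter.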