Calibrate: Interactive Analysis of Probabilistic Model Output

Peter Xenopoulos, João Rulff, Luis Gustavo Nonato, Brian Barr, Claudio Silva

View presentation: 2022-10-20T16:33:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, Interpreting Machine Learning.

Abstract

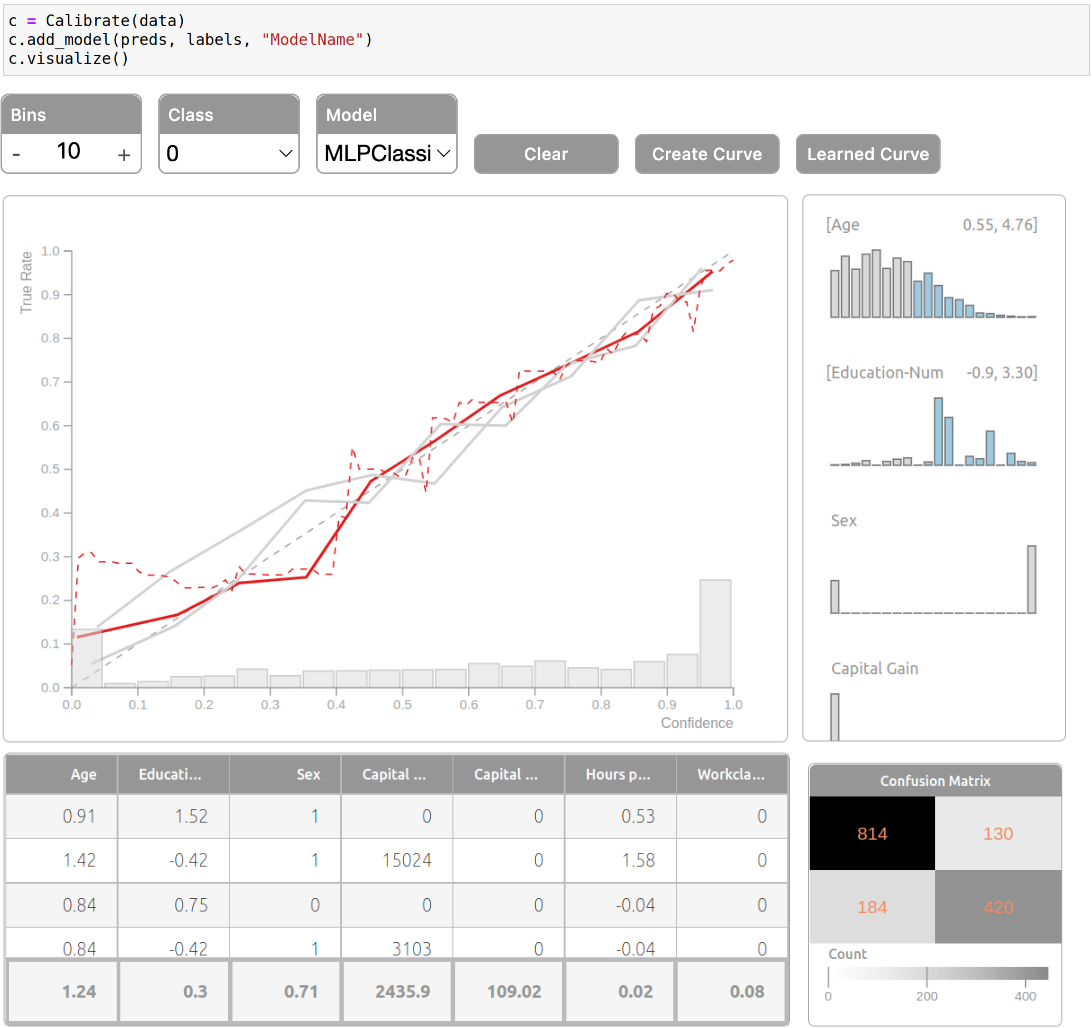

Analyzing classification model performance is a crucial task for machine learning practitioners. While practitioners often use count-based metrics derived from confusion matrices, such as accuracy, many applications, including weather prediction, sports betting, and patient risk prediction, rely on a classifier's predicted probabilities rather than its predicted labels. In these instances, practitioners are concerned with producing a calibrated model, that is, one whose output probabilities reflect those of the true distribution. Model calibration is often analyzed visually through static reliability diagrams. However, the traditional calibration visualization may suffer from a variety of drawbacks due to the strong aggregations it necessitates. Furthermore, count-based approaches are unable to sufficiently analyze model calibration. We present Calibrate, an interactive reliability diagram that addresses these issues. Calibrate constructs a reliability diagram that is resistant to the drawbacks of traditional approaches and allows for interactive subgroup analysis and instance-level inspection. We demonstrate the utility of Calibrate through use cases on both real-world and synthetic data. We further validate Calibrate by presenting the results of a think-aloud experiment with data scientists who routinely analyze model calibration.
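For readers unfamiliar with the traditional reliability diagram mentioned in the abstract, the following is a minimal sketch of the binned aggregation it relies on, for a binary classifier. It is not Calibrate's method or code; the function names `binned_reliability` and `expected_calibration_error`, the equal-width binning, and the default of 10 bins are all assumptions chosen for illustration.

```python
def binned_reliability(y_true, y_prob, n_bins=10):
    """Aggregate predictions into equal-width probability bins.

    Returns a list of (mean_predicted_prob, observed_positive_rate, count)
    tuples, one per non-empty bin. Hypothetical helper for illustration.
    """
    prob_sums = [0.0] * n_bins
    pos_sums = [0.0] * n_bins
    counts = [0] * n_bins
    for label, p in zip(y_true, y_prob):
        # Map p in [0, 1] to a bin index; p == 1.0 goes in the last bin.
        b = min(int(p * n_bins), n_bins - 1)
        prob_sums[b] += p
        pos_sums[b] += label
        counts[b] += 1
    return [
        (prob_sums[b] / counts[b], pos_sums[b] / counts[b], counts[b])
        for b in range(n_bins)
        if counts[b] > 0
    ]


def expected_calibration_error(bins):
    """Count-weighted mean gap between predicted and observed rates."""
    total = sum(count for _, _, count in bins)
    return sum(
        (count / total) * abs(observed - predicted)
        for predicted, observed, count in bins
    )


# Tiny hand-made example: two bins, each off by 0.2 from perfect calibration.
bins = binned_reliability([0, 0, 1, 1], [0.2, 0.2, 0.8, 0.8], n_bins=2)
ece = expected_calibration_error(bins)
```

Plotting each bin's mean predicted probability against its observed positive rate gives the static diagram; a perfectly calibrated model lies on the diagonal. The sketch also makes the aggregation concern visible: every instance in a bin collapses into a single point, and the result depends on the chosen number of bins.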