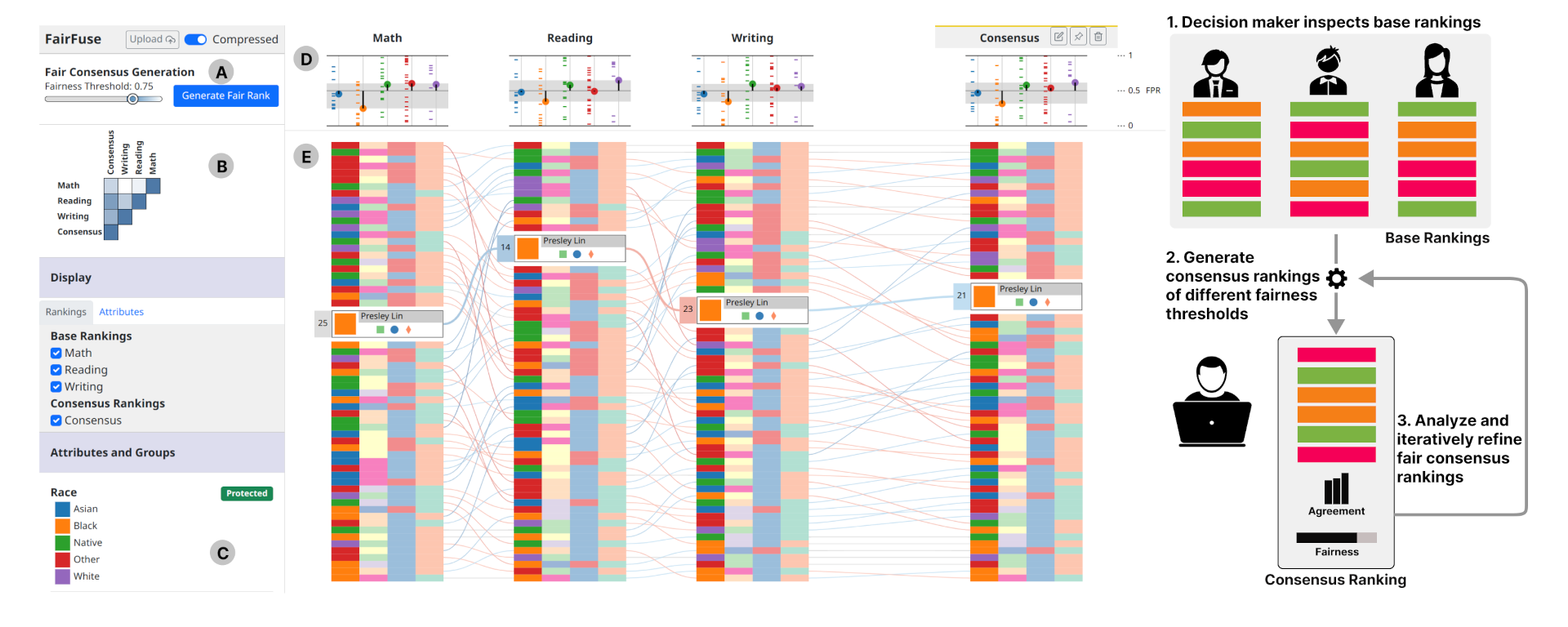

FairFuse: Interactive Visual Support for Fair Consensus Ranking

Hilson Shrestha, Kathleen Cachel, Mallak Alkhathlan, Elke A Rundensteiner, Lane Harrison

View presentation: 2022-10-19T21:21:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, Visual Analytics, Decision Support, and Machine Learning.

Keywords

Fairness, consensus, rank aggregation, visualization

Abstract

Fair consensus building combines the preferences of multiple rankers into a single consensus ranking, while ensuring that no group of ranked candidates defined by a protected attribute (such as race or gender) is disadvantaged compared to other groups. Manually generating a fair consensus ranking is time-consuming and impractical, even for a fairly small number of candidates. While algorithmic approaches for auditing and generating fair consensus rankings have been developed recently, these have not been operationalized in interactive systems. To bridge this gap, we introduce FairFuse, a visualization-enabled tool for generating, analyzing, and auditing fair consensus rankings. In developing FairFuse, we construct a data model that includes several base rankings entered by rankers, augmented with measures of group fairness, algorithms for generating consensus rankings with varying degrees of fairness, and other fairness and rank-related capabilities. We design novel visualizations that encode these measures in a parallel-coordinates style rank visualization, with interactive capabilities for generating and exploring fair consensus rankings. We provide case studies in which FairFuse supports a decision-maker in ranking scenarios where fairness is important. Finally, we discuss emerging challenges for future efforts supporting fairness-oriented rank analysis, including handling intersectionality, defined by multiple protected attributes, and the need for user studies targeting people's perceptions and use of fairness-oriented visualization systems. Code and demo videos are available at https://osf.io/hd639/.
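To make the two ideas in the abstract concrete, here is a minimal sketch of combining base rankings into a consensus and then auditing that consensus for group advantage. It is an illustration under stated assumptions, not FairFuse's implementation: Borda count stands in for the aggregation step, the pairwise advantage score is one simple way to quantify group fairness, and the candidates, groups, and function names (`borda_consensus`, `group_pair_advantage`) are hypothetical.

```python
from itertools import combinations


def borda_consensus(base_rankings):
    """Aggregate rankings (lists of candidates, best first) by Borda count.

    Assumption: Borda count is a stand-in for the aggregation step; the
    abstract does not say which consensus algorithm is used.
    """
    candidates = base_rankings[0]
    n = len(candidates)
    scores = {c: 0 for c in candidates}
    for ranking in base_rankings:
        for position, candidate in enumerate(ranking):
            # The top position earns n - 1 points, the last earns 0.
            scores[candidate] += n - 1 - position
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(candidates, key=lambda c: (-scores[c], c))


def group_pair_advantage(ranking, group_of):
    """Per group, the fraction of mixed-group pairs its members win.

    A mixed-group pair has one candidate from the group and one from
    another group. A score of 0.5 means the group wins half of these
    pairs; scores near 0 mean it is consistently ranked lower.
    """
    wins, totals = {}, {}
    # combinations() keeps list order, so `higher` is ranked above `lower`.
    for higher, lower in combinations(ranking, 2):
        g_hi, g_lo = group_of[higher], group_of[lower]
        if g_hi == g_lo:
            continue
        wins[g_hi] = wins.get(g_hi, 0) + 1
        totals[g_hi] = totals.get(g_hi, 0) + 1
        totals[g_lo] = totals.get(g_lo, 0) + 1
    return {g: wins.get(g, 0) / totals[g] for g in totals}


# Hypothetical data: three rankers, four candidates, two groups.
base_rankings = [
    ["A", "B", "C", "D"],
    ["B", "A", "D", "C"],
    ["A", "C", "B", "D"],
]
group_of = {"A": "g1", "B": "g1", "C": "g2", "D": "g2"}

consensus = borda_consensus(base_rankings)
advantage = group_pair_advantage(consensus, group_of)
print(consensus)  # ['A', 'B', 'C', 'D']
print(advantage)  # {'g1': 1.0, 'g2': 0.0}
```

In this toy example the unconstrained consensus places every `g1` candidate above every `g2` candidate, so the audit reports scores of 1.0 and 0.0. A fair consensus method would trade some agreement with the base rankings for scores closer to 0.5; for instance, the ranking `["A", "C", "D", "B"]` scores 0.5 for both groups.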