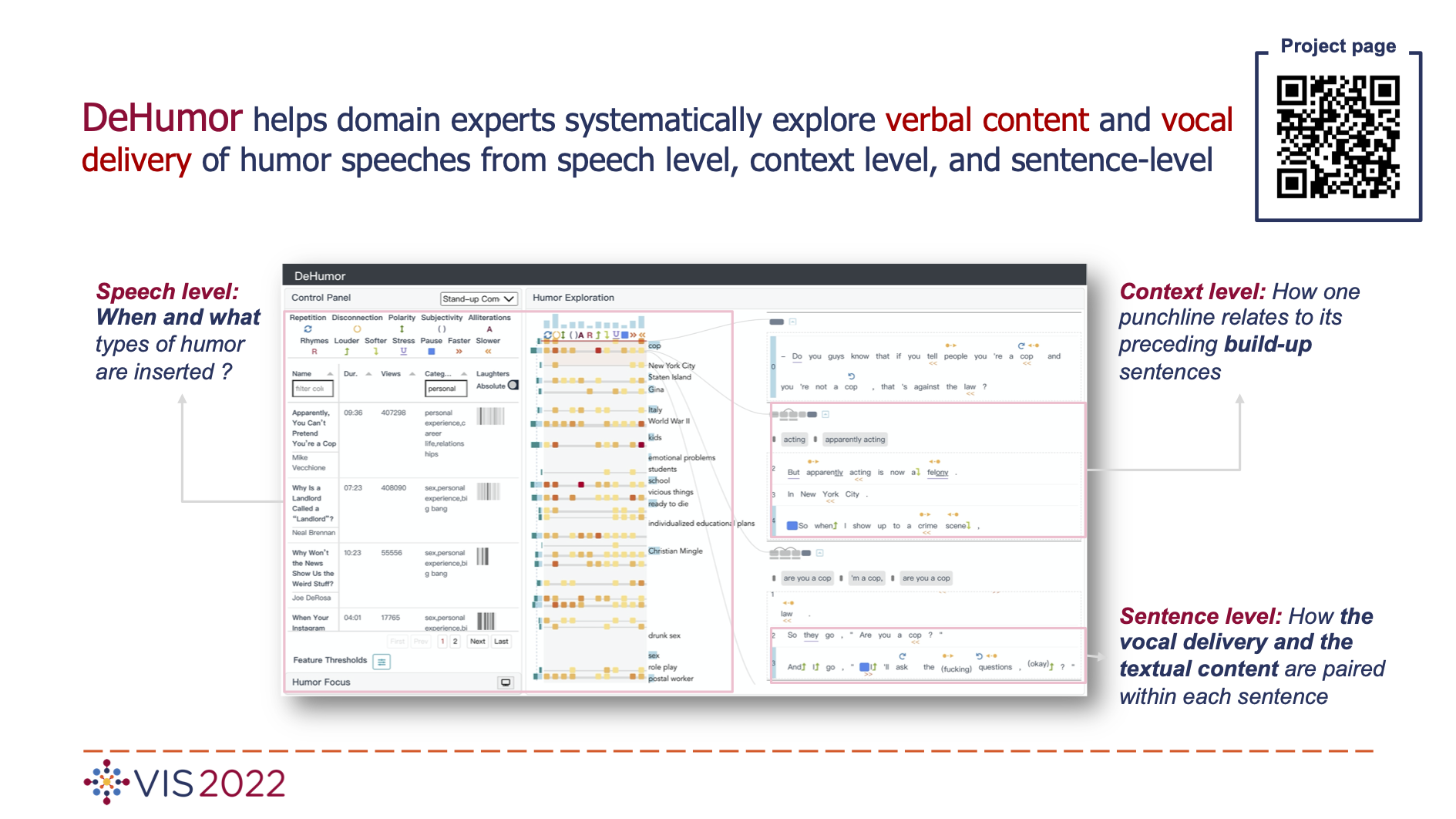

DeHumor: Visual Analytics for Decomposing Humor

Xingbo Wang, Yao Ming, Tongshuang Wu, Haipeng Zeng, Yong Wang, Huamin Qu

View presentation:2022-10-20T21:21:00ZGMT-0600Change your timezone on the schedule page

2022-10-20T21:21:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, Text, Language, and Image Data.

Fast forward

Keywords

Humor, Context, Multimodal Features, Visualization

Abstract

Despite being a critical communication skill, grasping humor is challenging—a successful use of humor requires a mixture of both engaging content build-up and an appropriate vocal delivery (e.g., pause). Prior studies on computational humor emphasize the textual and audio features immediately next to the punchline, yet overlooking longer-term context setup. Moreover, the theories are usually too abstract for understanding each concrete humor snippet. To fill in the gap, we develop DeHumor, a visual analytical system for analyzing humorous behaviors in public speaking. To intuitively reveal the building blocks of each concrete example, DeHumor decomposes each humorous video into multimodal features and provides inline annotations of them on the video script. In particular, to better capture the build-ups, we introduce content repetition as a complement to features introduced in theories of computational humor and visualize them in a context linking graph. To help users locate the punchlines that have the desired features to learn, we summarize the content (with keywords) and humor feature statistics on an augmented time matrix. With case studies on stand-up comedy shows and TED talks, we show that DeHumor is able to highlight various building blocks of humor examples. In addition, expert interviews with communication coaches and humor researchers demonstrate the effectiveness of DeHumor for multimodal humor analysis of speech content and vocal delivery.