Reinforcement Learning for Load-balanced Parallel Particle Tracing

Jiayi Xu, Hanqi Guo, Han-Wei Shen, Mukund Raj, Skylar W. Wurster, Tom Peterka

View presentation: 2022-10-20T14:36:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, ML for VIS.

Keywords

Distributed and parallel particle tracing, dynamic load balancing, reinforcement learning.

Abstract

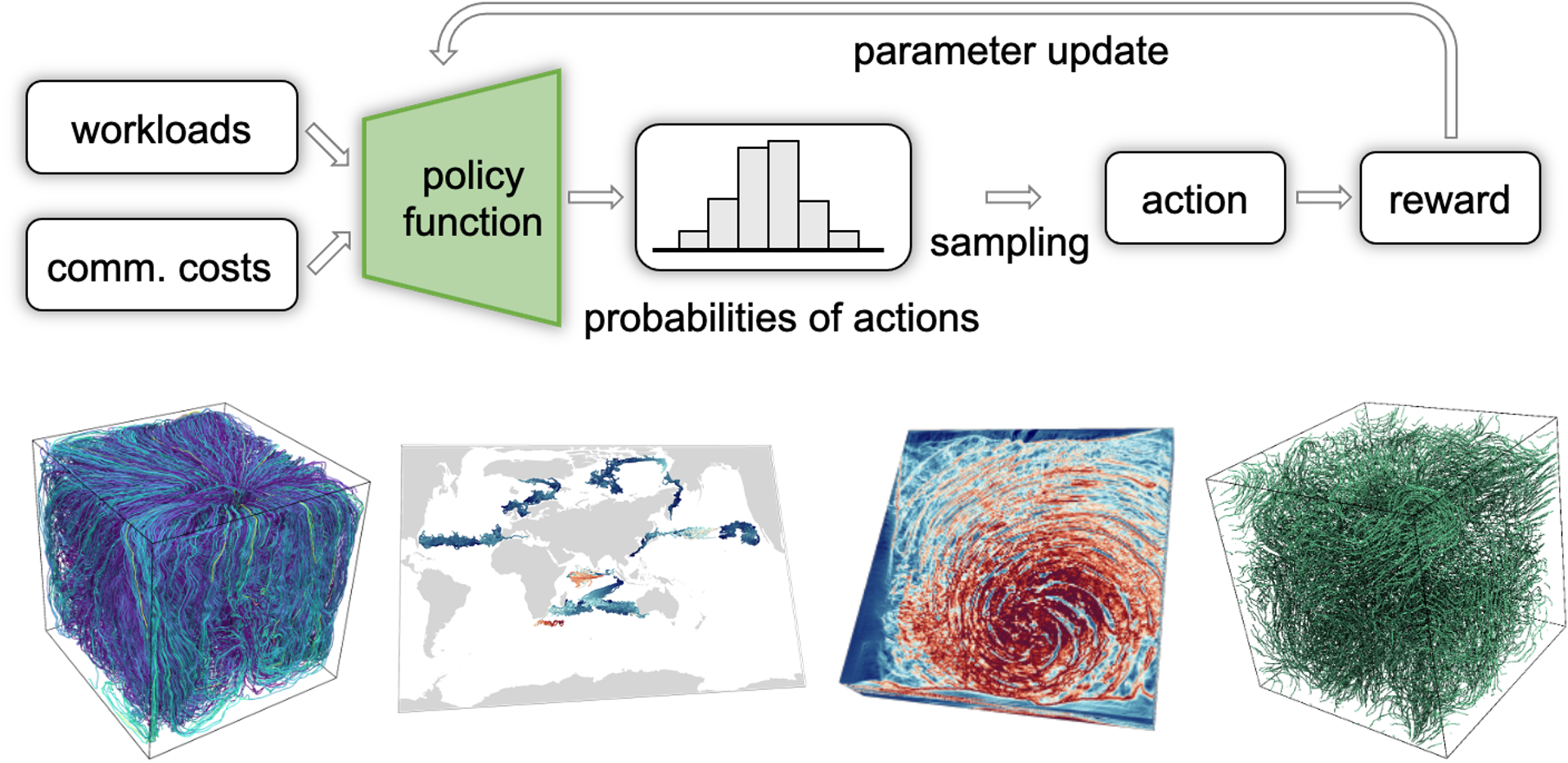

We explore an online reinforcement learning (RL) paradigm to dynamically optimize parallel particle tracing performance in distributed-memory systems. Our method combines three novel components: (1) a work donation algorithm, (2) a high-order workload estimation model, and (3) a communication cost model. First, we design an RL-based work donation algorithm. Our algorithm monitors workloads of processes and creates RL agents to donate data blocks and particles from high-workload processes to low-workload processes to minimize program execution time. The agents learn the donation strategy on the fly based on reward and cost functions designed to consider processes' workload changes and data transfer costs of donation actions. Second, we propose a workload estimation model, helping RL agents estimate the workload distribution of processes in future computations. Third, we design a communication cost model that considers both block and particle data exchange costs, helping RL agents make effective decisions with minimized communication costs. We demonstrate that our algorithm adapts to different flow behaviors in large-scale fluid dynamics, ocean, and weather simulation data. Our algorithm improves parallel particle tracing performance in terms of parallel efficiency, load balance, and costs of I/O and communication for evaluations with up to 16,384 processors.
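The donation idea described in the abstract can be illustrated with a small toy sketch. This is not the authors' algorithm: it swaps in a simple epsilon-greedy bandit for the RL agent, and every name here (`donation_reward`, `DonationAgent`, `run_donations`, the `chunk` size, and the scalar `transfer_costs`) is a hypothetical stand-in chosen for illustration. The reward is assumed to be the drop in the maximum per-process workload minus a weighted transfer cost, which loosely mirrors the abstract's "workload changes and data transfer costs".

```python
import random


def donation_reward(loads_before, loads_after, transfer_cost, cost_weight=1.0):
    """Hypothetical reward: drop in the maximum workload minus weighted transfer cost."""
    improvement = max(loads_before.values()) - max(loads_after.values())
    return improvement - cost_weight * transfer_cost


class DonationAgent:
    """Toy epsilon-greedy agent that keeps a running value estimate per receiver."""

    def __init__(self, receivers, epsilon=0.1, step_size=0.5, seed=0):
        self.values = {r: 0.0 for r in receivers}
        self.epsilon = epsilon
        self.step_size = step_size
        self.rng = random.Random(seed)

    def choose(self):
        candidates = sorted(self.values)
        # Explore with probability epsilon, otherwise pick the best-valued receiver.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(candidates)
        return max(candidates, key=self.values.get)

    def update(self, receiver, reward):
        # Incremental update: move the estimate toward the observed reward.
        self.values[receiver] += self.step_size * (reward - self.values[receiver])


def run_donations(loads, transfer_costs, donor, rounds=20, chunk=10.0, epsilon=0.1):
    """Let one overloaded process (donor) try to donate fixed-size chunks of work."""
    loads = dict(loads)
    agent = DonationAgent([p for p in loads if p != donor], epsilon=epsilon)
    for _ in range(rounds):
        receiver = agent.choose()
        # Evaluate the proposed donation before committing to it.
        proposed = dict(loads)
        proposed[donor] -= chunk
        proposed[receiver] += chunk
        reward = donation_reward(loads, proposed, transfer_costs[receiver])
        agent.update(receiver, reward)
        # Apply the donation only when it pays off after transfer cost.
        if reward > 0:
            loads = proposed
    return loads
```

For example, `run_donations({0: 100.0, 1: 20.0, 2: 30.0}, {1: 1.0, 2: 5.0}, donor=0)` starts with process 0 overloaded and lets it donate work until no donation yields a positive reward. In the method described above, the workload and communication terms would come from the workload estimation and communication cost models rather than from fixed numbers.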