Evaluating Situated Visualization with Eye Tracking

Kuno Kurzhals, Michael Becher, Nelusa Pathmanathan, Guido Reina

View presentation: 2022-10-17T16:45:00Z

The live footage of the talk, including the Q&A, can be viewed on the session page, BELIV: Paper Session 1.

Abstract

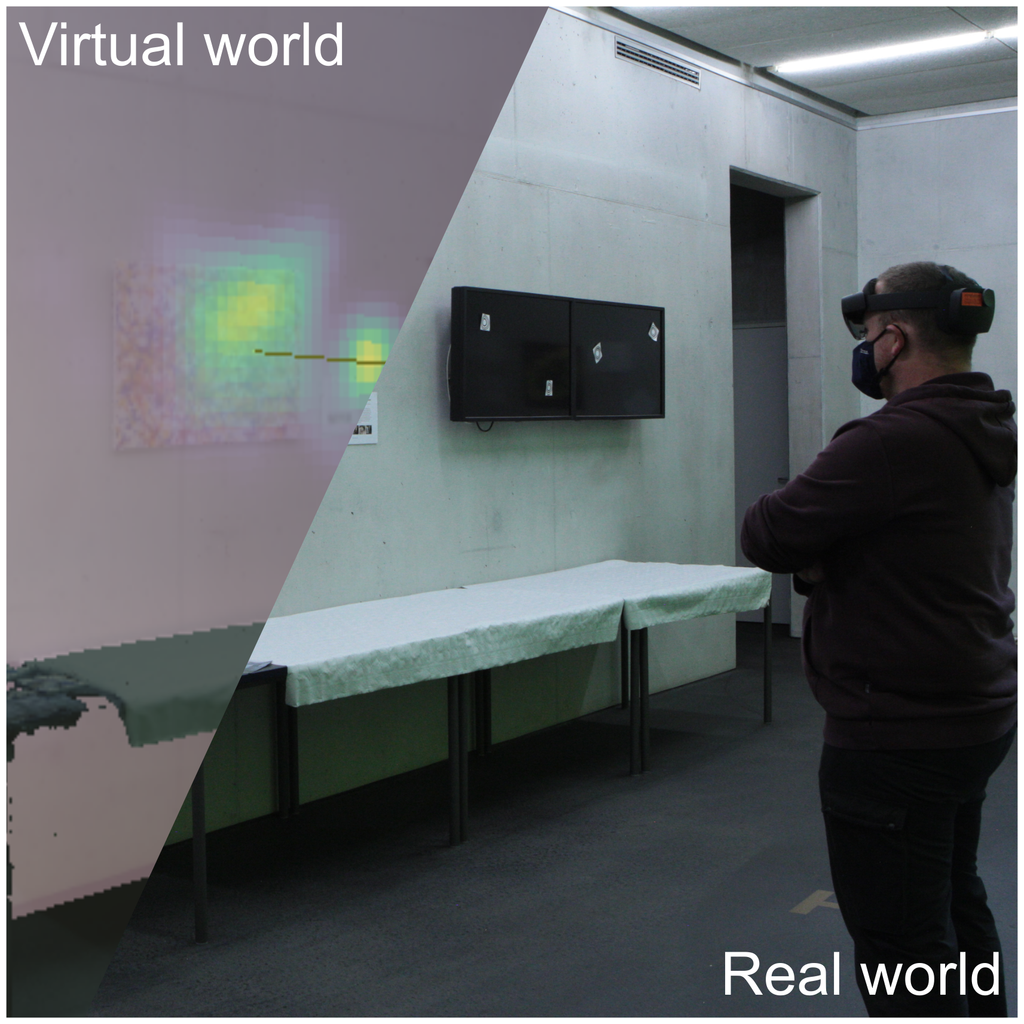

Augmented reality (AR) technology provides the means to embed visualizations in a real-world context. Such techniques enable situated analysis of live data within its spatial domain. However, because existing techniques must be adapted to this context and new approaches are still to be developed, evaluating them poses new challenges for researchers. Beyond established performance measures, eye tracking has proven to be a valuable means of assessing visualizations both qualitatively and quantitatively. We discuss the challenges and opportunities of using eye tracking to evaluate situated visualizations. We envision that extending gaze-based evaluation methodology into this field will provide new insights into how people perceive and interact with visualizations in augmented reality.