Joint Neural Phase Retrieval and Compression for Energy- and Computation-efficient Holography on the Edge

Yujie Wang, Praneeth Chakravarthula, Qi Sun, Baoquan Chen

2022-10-19T16:09:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, SIGGRAPH Invited Talks.

Keywords

Computer generated holography, neural hologram generation, hologram compression

Abstract

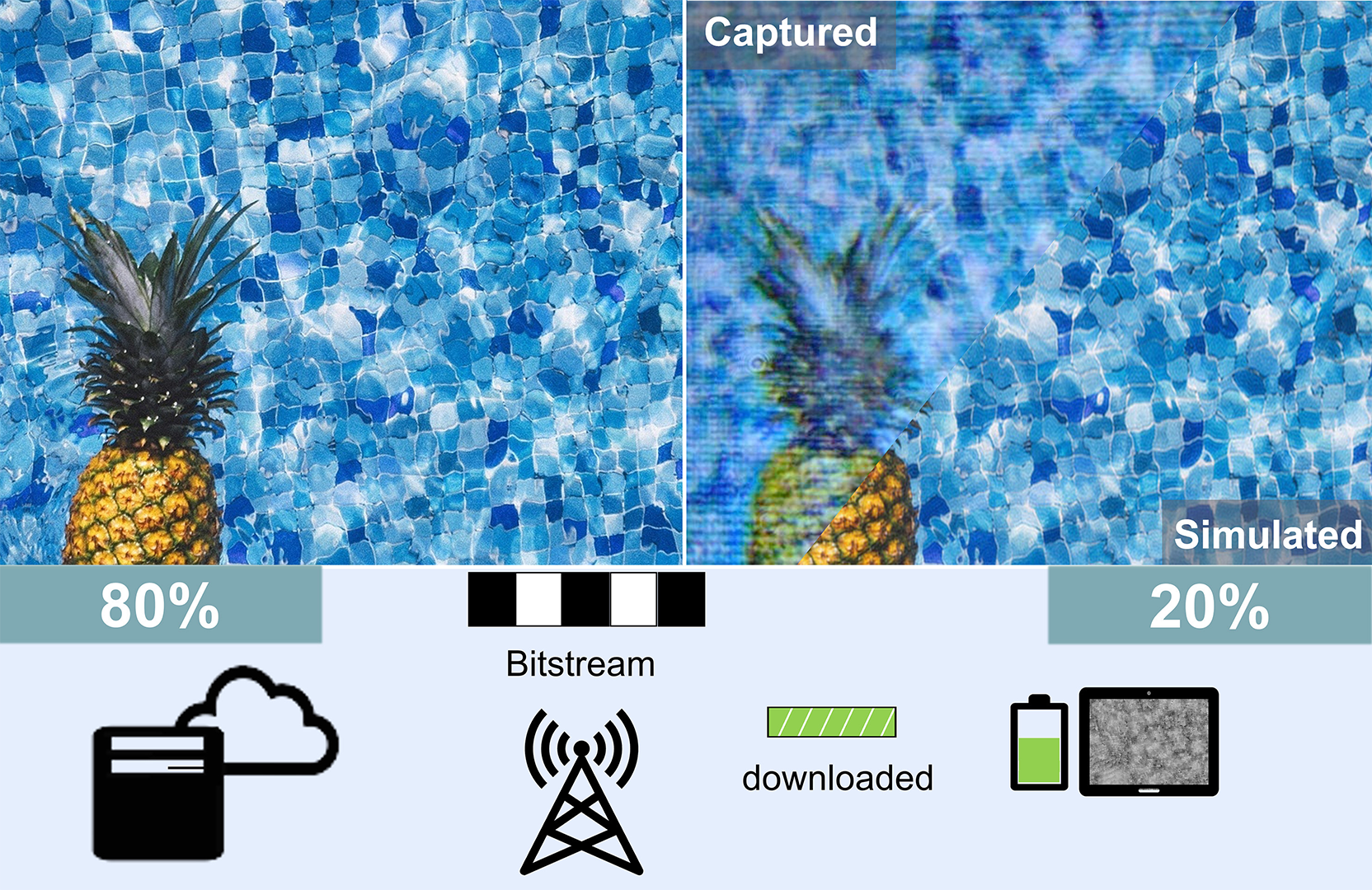

Recent deep learning approaches have shown remarkable promise for enabling high-fidelity holographic displays. However, lightweight wearable display devices cannot afford the computation and energy that hologram generation demands, owing to their limited onboard compute capability and battery life. On the other hand, if the computation is conducted entirely remotely on a cloud server, transmitting lossless hologram data is not only challenging but also results in prohibitively high latency and storage. In this work, by distributing the computation and optimizing the transmission, we propose the first framework that jointly generates and compresses high-quality phase-only holograms. Specifically, our framework asymmetrically separates the hologram generation process into a high-compute remote encoding stage (on the server) and a low-compute decoding stage (on the edge). Our encoding produces lightweight latent-space data, enabling faster and more efficient transmission to the edge device. With our framework, we observed a 76% reduction in computation and, consequently, an 83% reduction in energy cost on edge devices, compared to existing hologram generation methods. Our framework is robust to transmission and decoding errors, approaches high image fidelity at as low as 2 bits per pixel, and further reduces average bit rates and decoding time for holographic videos.
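
To give a rough sense of what a 2 bits-per-pixel rate means for transmission, the following minimal sketch computes the per-frame payload for a single hologram. The 1920x1080 resolution and the 8-bit uncompressed phase baseline are illustrative assumptions, not figures from the abstract, and `payload_bytes` is a hypothetical helper rather than part of the authors' framework.

```python
def payload_bytes(width: int, height: int, bits_per_pixel: float) -> float:
    """Return the size in bytes of one frame sent at the given bit rate."""
    return width * height * bits_per_pixel / 8


# Assumed values for illustration only: a 1920x1080 phase-only hologram
# whose uncompressed phase is stored at 8 bits per pixel.
WIDTH, HEIGHT = 1920, 1080

raw = payload_bytes(WIDTH, HEIGHT, 8)         # uncompressed baseline (assumed)
compressed = payload_bytes(WIDTH, HEIGHT, 2)  # 2 bits per pixel, as in the abstract
ratio = raw / compressed

print(f"raw: {raw:.0f} B, compressed: {compressed:.0f} B, ratio: {ratio:.1f}x")
```

Under these assumptions the latent payload is a quarter of the uncompressed frame; the actual savings depend on the display resolution and on the bit depth of the baseline being compared against.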