Spelunking the Deep: Guaranteed Queries on General Neural Implicit Surfaces

Nicholas Sharp, Alec Jacobson

2022-10-19T16:21:00Z

Prerecorded Talk

The live footage of the talk, including the Q&A, can be viewed on the session page, SIGGRAPH Invited Talks.

Keywords

implicit surfaces, neural networks, range analysis, geometry processing

Abstract

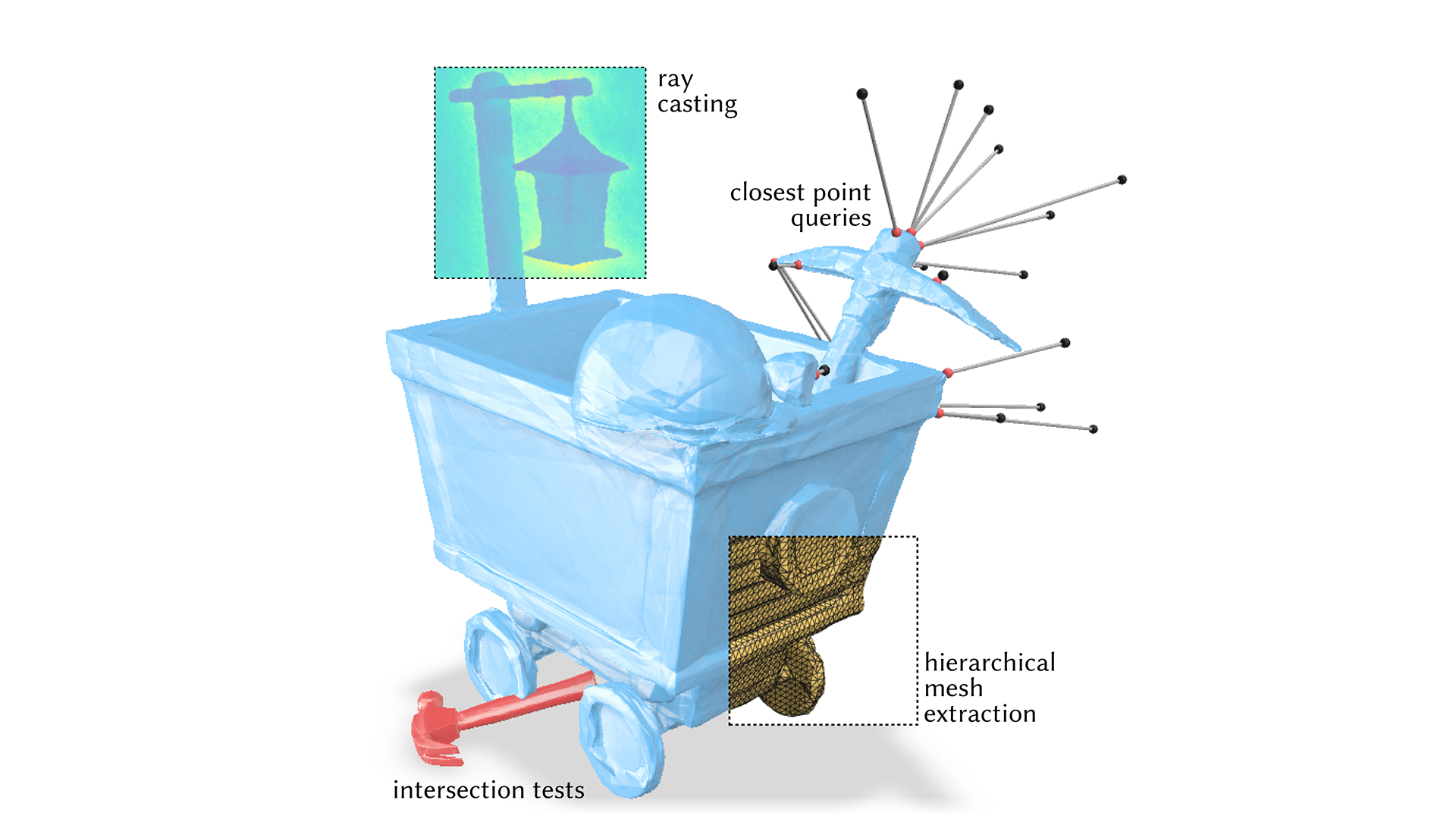

Neural implicit representations, which encode a surface as the level set of a neural network applied to spatial coordinates, have proven remarkably effective for optimizing, compressing, and generating 3D geometry. Although these representations are easy to fit, it is not clear how best to evaluate geometric queries on the shape, such as intersecting it with a ray or finding a closest point. The predominant approach is to encourage the network to have a signed distance property. However, this property typically holds only approximately, which leads to robustness issues, and it holds only at the conclusion of training, which prevents the use of queries in loss functions. Instead, this work presents a new approach for performing queries directly on general neural implicit functions across a wide range of existing architectures. Our key tool is the application of range analysis to neural networks, using automatic arithmetic rules to bound the output of a network over a region; we conduct a study of range analysis on neural networks and identify variants of affine arithmetic that are highly effective. We use the resulting bounds to develop geometric queries including ray casting, intersection testing, constructing spatial hierarchies, fast mesh extraction, closest-point evaluation, evaluating bulk properties, and more. Our queries can be efficiently evaluated on GPUs and offer concrete accuracy guarantees even on randomly initialized networks, enabling their use in training objectives and beyond. We also show a preliminary application to inverse rendering.
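To make the core idea concrete, here is a minimal sketch in plain Python. It assumes a tiny hand-built ReLU MLP (the hypothetical `OCTA` network, whose zero level set is the octahedron |x| + |y| + |z| = 1) and the standard Chebyshev linearization of ReLU in affine arithmetic. The paper studies several affine-arithmetic variants, and this sketch does not claim to be the one it recommends. The ray caster is a plain front-to-back bisection that discards any segment whose bound excludes zero. It is an illustration of bound-driven ray casting, not the authors' GPU implementation. All names (`Affine`, `relu`, `mlp`, `box_range`, `cast_ray`, `OCTA`) are hypothetical.

```python
import itertools
from dataclasses import dataclass, field

_fresh = itertools.count()  # supply of new noise-symbol ids


@dataclass
class Affine:
    """Affine form: center + sum_i coeffs[i] * eps_i, with each eps_i in [-1, 1]."""
    center: float
    coeffs: dict = field(default_factory=dict)

    def bounds(self):
        r = sum(abs(a) for a in self.coeffs.values())
        return self.center - r, self.center + r


def from_interval(lo, hi):
    # A fresh noise symbol means the value may be anywhere in [lo, hi].
    return Affine((lo + hi) / 2, {next(_fresh): (hi - lo) / 2})


def linear_combo(terms, bias):
    # Exact: affine forms are closed under sum_k w_k * x_k + bias.
    out = Affine(bias, {})
    for w, x in terms:
        out.center += w * x.center
        for k, a in x.coeffs.items():
            out.coeffs[k] = out.coeffs.get(k, 0.0) + w * a
    return out


def relu(x):
    lo, hi = x.bounds()
    if hi <= 0:
        return Affine(0.0, {})  # provably inactive
    if lo >= 0:
        return x  # provably active
    # Chebyshev linearization (assumed rule): relu(x) - alpha*x lies in [0, -alpha*lo].
    alpha = hi / (hi - lo)
    half = -alpha * lo / 2
    out = linear_combo([(alpha, x)], half)
    out.coeffs[next(_fresh)] = half  # new symbol absorbs the linearization error
    return out


def mlp(layers, inputs):
    # layers: list of (W, b); ReLU after every layer except the last.
    h = inputs
    for i, (W, b) in enumerate(layers):
        h = [linear_combo(list(zip(row, h)), bj) for row, bj in zip(W, b)]
        if i < len(layers) - 1:
            h = [relu(v) for v in h]
    return h


# Hypothetical network: f(p) = |x| + |y| + |z| - 1, using |v| = relu(v) + relu(-v).
W1 = [[1, 0, 0], [-1, 0, 0], [0, 1, 0], [0, -1, 0], [0, 0, 1], [0, 0, -1]]
OCTA = [(W1, [0] * 6), ([[1] * 6], [-1])]


def box_range(net, box):
    """Conservative [lo, hi] containing f over an axis-aligned box."""
    (f,) = mlp(net, [from_interval(lo, hi) for lo, hi in box])
    return f.bounds()


def cast_ray(net, origin, direction, t0, t1, eps=1e-4):
    """Smallest segment start t where f(o + t d) may vanish, or None.
    Segments whose bound excludes zero are skipped, so no true crossing is missed."""
    stack = [(t0, t1)]
    while stack:
        a, b = stack.pop()
        t = from_interval(a, b)  # one symbol covers the whole segment
        p = [linear_combo([(d, t)], o) for o, d in zip(origin, direction)]
        lo, hi = mlp(net, p)[0].bounds()
        if lo > 0 or hi < 0:
            continue  # f has constant sign here: no hit on this segment
        if b - a < eps:
            return a
        m = (a + b) / 2
        stack.append((m, b))  # far half pushed first ...
        stack.append((a, m))  # ... so the near half is examined first
    return None
```

As a usage example, `cast_ray(OCTA, (-3.0, 0.0, 0.0), (1.0, 0.0, 0.0), 0.0, 10.0)` returns a value within `eps` below t = 2, where the ray first enters the octahedron. Moving the origin to `(-3.0, 5.0, 0.0)` gives `None` after a single bound evaluation. Note that these guarantees come from the bounds alone and do not rely on `f` being a signed distance function.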